Functional Information and the Rewriting of Physical Law

Functional information reframes physics by explaining how purposeful complexity emerges through selection for function, making it a fundamental driver in evolution and the cosmos.

The theory of functional information represents a paradigm-shifting framework that redefines our understanding of complexity, evolution, and the fabric of physical reality. Traditionally, the evolution of order and structure in the universe has been interpreted through the lens of thermodynamics, energy minimization, and the emergence of statistical regularities. But this view fails to explain a crucial phenomenon: the persistent rise of systems that are not only ordered, but functionally adaptive, able to maintain themselves, respond to their environment, and generate novelty. The concept of functional information addresses this gap by focusing not merely on form, but on the capacity of structures to do something that enhances persistence or adaptability.

This theory has been most notably developed by Dr. Michael L. Wong, an astrobiologist at Carnegie Science and NASA, and Dr. Robert M. Hazen, a senior scientist at the Carnegie Institution for Science known for his pioneering work on mineral evolution. Collaborating with scientists such as Jonathan Lunine (Cornell University), Jack Szostak (Nobel Laureate in Physiology or Medicine, who introduced the concept of functional information in 2003), and others, Wong and Hazen propose that functional information plays a universal role in the emergence of complexity, whether in the formation of life, the development of technological systems, or even the structure of galaxies and planetary systems. Their publications span fields as diverse as origin-of-life research, planetary evolution, astrobiology, and physics, and include seminal papers like “On the Roles of Function and Selection in Evolving Systems” and “Functional Information and the Emergence of Biocomplexity.”

At its core, functional information refers to the amount of information necessary to achieve a specific function within a given system. The more rare and context-sensitive the functional configuration is within the broader configuration space, the more functional information it contains. For example, of all the possible sequences of amino acids, only a tiny fraction fold into functional proteins. The same logic applies across domains: only a subset of mineral combinations catalyze biological reactions; only some circuits perform useful computations; only some cultural memes enhance survival or cooperation. By quantifying functionality rather than structure alone, this framework allows us to compare the evolutionary potential and intelligence of systems across biology, chemistry, technology, and culture.

A key insight of the theory is that evolution by selection for function is not exclusive to life. It occurs in any system that meets three universal criteria: (1) a diverse set of interacting components, (2) a means of generating novel configurations, and (3) a selection mechanism that preserves those configurations which contribute to persistence or new adaptive capacity. From star formation to mineral diversification to cognitive development, wherever these criteria are met, functional information can increase. This turns evolution into a cross-domain algorithm for exploring possibility space — an insight with profound consequences for both physics and artificial intelligence.

Perhaps most provocatively, the authors argue that functional information is a fundamental physical quantity, on par with mass, energy, and entropy. While entropy quantifies disorder, functional information quantifies purposeful order — configurations that do something, persist, and adapt. This shift allows scientists to explain how complexity can emerge not in spite of the second law of thermodynamics, but because of the openness and non-equilibrium character of real-world systems. Life, cognition, and technology thus appear not as anomalies in an entropic universe, but as the natural consequences of a universe capable of storing and selecting for function.

In synthesizing insights from origin-of-life chemistry, geology, biology, and computation, Wong, Hazen, and colleagues have opened the door to a new scientific language — one that speaks not just of what is, but what works. Their theory reorients the study of evolution away from form and toward function, providing a scalable way to study intelligence, novelty, agency, and persistence in any domain. As the search for alien life, artificial general intelligence, and universal laws of complexity accelerates, functional information may become a unifying framework for understanding how purpose emerges from physics itself.

Summary

1. Functional Information Is a Universal Property of Evolving Systems

Across atoms, minerals, cells, societies, and technologies, systems evolve by accumulating functional configurations—not just structure, but structure that performs a function.

Profound implication: Functionally selected order—not just random complexity—can be quantified and compared across domains, from prebiotic chemistry to machine intelligence.

2. Functional Information Measures the Capacity for Purposeful Action

It quantifies how rare the configurations that achieve a specific outcome, goal, or function are among all possible configurations of a system.

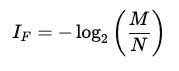

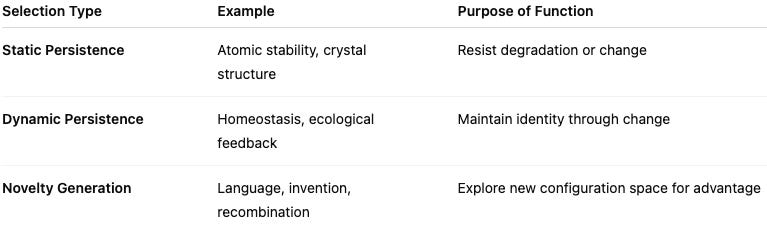

Formally:

$$I = -\log_2\left(\frac{M}{N}\right)$$

where M = number of configurations that achieve the function, and N = total configurations.

Profound implication: This reframes complexity around utility, not entropy—introducing selection and performance into the heart of physics.

3. Selection for Function Drives Complexity Upward

Systems do not merely increase in entropy—they undergo selection that increases the density of useful, functional configurations.

Profound implication: Evolution is not just a biological principle—it is a physical principle embedded in the dynamics of the universe.

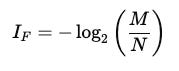

4. Three Criteria Define an Evolving Functional System

For any system to accumulate functional information, it must:

Contain diverse, interacting components

Be capable of generating variants (novel configurations)

Be subject to selection for function (fitness)

Profound implication: This principle can be used to test whether any system—biological or not—is evolving in an information-rich way.

5. Complexity Emerges in Open, Nonequilibrium Systems

Functional information increases in open systems that exchange energy, matter, and information with their environment.

Profound implication: Complexity is not an anomaly or violation of the second law of thermodynamics; it is expected in open systems under sustained flow and selection.

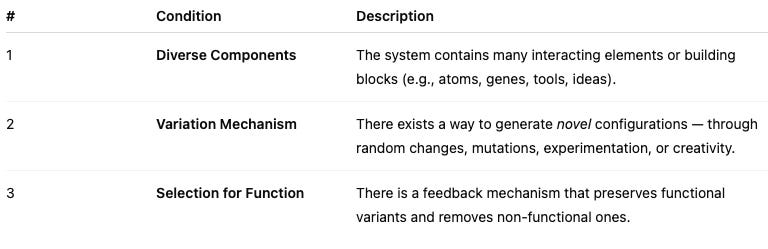

6. Functional Information Enables Persistence and Novelty

Selection occurs for:

Static persistence (e.g. stable atomic nuclei)

Dynamic persistence (e.g. living cells, ecosystems)

Novelty generation (e.g. language, invention, software)

Profound implication: These forms of selection generalize Darwinian evolution into physics and planetary science, bridging animate and inanimate systems.

7. Information Is as Fundamental as Mass, Energy, and Charge

The authors argue that information—especially functional information—should be considered a first-class variable in physics.

Profound implication: This requires a radical rethinking of the state space of physics to include functional outcomes and not just positions and momenta.

8. Life Is an Information-Processing Phenomenon

Living systems are defined not just by chemistry, but by their ability to sense, store, transform, and transmit information toward survival or function.

Profound implication: The definition of life may shift from molecular composition to information processing and functionality.

9. Evolution Is an Algorithmic Principle of Nature

Evolution via selection for function is a general algorithm nature uses to explore configuration space efficiently.

Profound implication: Evolution becomes a universal search algorithm applicable to galaxies, technologies, or consciousness.

10. The Arrow of Functional Information Is Distinct from Entropy

Entropy increases disorder. Functional information increases useful order.

Profound implication: There is a second arrow of time—one for emergence and organization—distinct from entropy, which may resolve paradoxes in cosmology and origin-of-life studies.

11. Functional Information Enables Cross-Domain Comparisons

Because it's grounded in function rather than structure or medium, it allows comparison of systems across biology, geology, cosmology, and AI.

Profound implication: Enables a shared language between fields and supports universal metrics of progress, complexity, or vitality.

12. Functional Information Creates the Conditions for Reflexive Complexity

As functional information accumulates, systems gain the ability to process, generate, and select new functional information (e.g., via cognition or computation).

Profound implication: This feedback loop allows for recursive self-enhancement, foundational for understanding culture, language, and artificial general intelligence.

Principles in Detail

Principle 1: Functional Information Is a Universal Property of Evolving Systems

Core Insight:

Functional information is not confined to biology or computation — it is embedded in the fabric of natural systems wherever variation and selection exist. Whether we're discussing the formation of stars, the evolution of life, the development of language, or the design of software, functional information accumulates when certain configurations persist because they serve a function.

Definition:

Functional information is the information required to achieve a specific function in a given system. It quantifies how rare or common functional configurations are within a space of all possible configurations.

Formally:

$$I = -\log_2\left(\frac{M}{N}\right)$$

where:

M: number of configurations that achieve the function

N: total number of possible configurations

The fewer configurations that can perform the function, the greater the functional information.
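To make the definition concrete, here is a minimal Python sketch. It assumes a toy configuration space (all binary strings of length 10) and a hypothetical function, "at least 8 of the 10 bits are 1"; neither comes from Wong and Hazen, and real configuration spaces are rarely small enough to enumerate.

```python
import itertools
import math


def functional_information(m, n):
    """Functional information in bits: I = -log2(M / N)."""
    if not 0 < m <= n:
        raise ValueError("need 0 < M <= N")
    return -math.log2(m / n)


def count_functional(length, is_functional):
    """Enumerate every binary string of the given length and return (M, N)."""
    configs = list(itertools.product((0, 1), repeat=length))
    m = sum(1 for c in configs if is_functional(c))
    return m, len(configs)


# Hypothetical toy function: a 10-bit configuration "works" if at least 8 bits are 1.
m, n = count_functional(10, lambda bits: sum(bits) >= 8)
print(m, n, round(functional_information(m, n), 2))  # 56 1024 4.19
```

The two extremes behave as the text describes: if every configuration works (M = N) the result is 0 bits, and if exactly one works it is log2 N bits, the maximum for that space.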

Examples:

A DNA sequence that encodes a functioning enzyme carries high functional information relative to random sequences.

A planetary climate that maintains surface liquid water for billions of years (e.g., Earth) has high functional information in planetary configuration space.

A technological artifact (e.g., a clock or a neural network) that performs a complex task contains high functional information due to intentional structure.

Why It Matters:

Traditional physics focuses on states (positions, energies, momenta), but ignores function. This principle adds a new dimension to physical systems: what a configuration does, not just what it is.

Functional information provides a way to:

Measure organization with purpose

Track the directionality of complexity

Compare systems across domains (e.g., cells vs software vs ecosystems)

Principle 2: Functional Information Measures the Capacity for Purposeful Action

Core Insight:

Functional information doesn’t measure how complex something looks — it measures how much specific, goal-oriented work is embodied in a configuration. It is not about raw complexity, but about goal-achieving capacity.

This makes it teleonomic, not teleological:

Teleology implies intrinsic purpose (e.g., divine plan)

Teleonomy implies function selected through consequence, such as fitness or persistence

Practical Interpretation:

Functional information provides a probabilistic measure: how likely a randomly chosen configuration of the system is to produce a particular effect.

For instance:

In prebiotic chemistry, 1 in 10¹² molecules might catalyze a certain reaction → high functional information (about 40 bits)

In neural networks, a very narrow set of weights leads to successful generalization → high functional information

In architecture, many room layouts may be possible, but only a few maximize light, airflow, and function → functional information can be applied here too.
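The "1 in 10¹²" figure converts directly into bits, and when a space is too large to enumerate, the fraction M/N can be estimated by random sampling. The sketch below assumes configurations can be sampled uniformly and uses a hypothetical toy function (a 20-bit string that works only if its top 6 bits are all 1); it illustrates the estimate and is not a method taken from the source.

```python
import math
import random


def bits_from_fraction(fraction):
    """Functional information in bits for a functional fraction M/N."""
    return -math.log2(fraction)


def estimate_bits(sample, is_functional, trials, rng):
    """Monte Carlo estimate of -log2(M/N) from uniformly sampled configurations."""
    hits = sum(1 for _ in range(trials) if is_functional(sample(rng)))
    if hits == 0:
        # The fraction is below what this many samples can resolve.
        raise ValueError("no functional configurations sampled; increase trials")
    return bits_from_fraction(hits / trials)


# "1 in 10^12 molecules catalyze the reaction" corresponds to about 39.9 bits.
print(round(bits_from_fraction(1e-12), 1))  # 39.9

# Hypothetical toy space: exact answer is -log2(1/64) = 6 bits; sampling should land close.
rng = random.Random(0)
estimate = estimate_bits(
    sample=lambda r: r.getrandbits(20),
    is_functional=lambda x: (x >> 14) == 0b111111,
    trials=100_000,
    rng=rng,
)
print(round(estimate, 2))
```

Sampling only works when functional configurations are not too rare: resolving a fraction near 10⁻¹² would need on the order of 10¹² samples, which is one reason rare functions are hard to measure directly.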

Philosophical Implication:

This principle shifts our focus in science:

From description to action

From static structure to selected outcome

From what exists to what persists due to utility

Functional information thus provides a missing link between information theory (which is agnostic about meaning) and systems that evolve meaningfully.

Principle 3: Selection for Function Drives Complexity Upward

Core Insight:

Contrary to the intuition that systems degrade over time (as entropy increases), systems can also evolve into increasingly complex, ordered states — if they are selected for function.

Evolution doesn’t just act in biology. It operates wherever systems generate variation, and there exists selection pressure based on persistence, utility, or efficiency.

This is the unifying evolutionary principle proposed by Wong and Hazen:

All complex systems evolve by accumulating functional information via selection.

Evolution Beyond Biology:

Atoms: Hydrogen → Helium fusion in stars → stable atomic configurations

Minerals: From about a dozen "ur-minerals" in presolar dust to more than 5,000 mineral species today, selected for stability, reaction networks, and biotic influence

Life: From self-replicating molecules to the biosphere

Language: From grunts to grammar to digital code

Technology: From stone tools to neural interfaces

In each case:

The search space is vast

The functional configurations are rare

The system evolves via selection for persistence or performance
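The ratchet described above can be watched numerically. "Functional Information and the Emergence of Biocomplexity" measures M as the number of configurations whose degree of function is at least some threshold E_x, so I(E_x) = -log2(M/N) rises as the achieved threshold rises. The sketch assumes a toy space (32-bit strings), a hypothetical degree of function (bits matching a random target), single-bit mutation as variation, and "keep if no worse" as selection.

```python
import math
import random

LENGTH = 32


def degree_of_function(config, target):
    """Toy degree of function: number of bit positions that agree with the target."""
    return LENGTH - bin(config ^ target).count("1")


def functional_information(threshold):
    """Exact I(E_x) = -log2(M/N), where M counts configurations with degree >= threshold."""
    m = sum(math.comb(LENGTH, j) for j in range(threshold, LENGTH + 1))
    return -math.log2(m / 2 ** LENGTH)


def evolve(generations, seed):
    """Flip one bit per generation; keep the variant if it functions at least as well."""
    rng = random.Random(seed)
    target = rng.getrandbits(LENGTH)   # hypothetical function to be achieved
    current = rng.getrandbits(LENGTH)  # random starting configuration
    history = [functional_information(degree_of_function(current, target))]
    for _ in range(generations):
        variant = current ^ (1 << rng.randrange(LENGTH))  # variation
        if degree_of_function(variant, target) >= degree_of_function(current, target):
            current = variant                             # selection
        history.append(functional_information(degree_of_function(current, target)))
    return history


trajectory = evolve(generations=200, seed=1)
print(f"start {trajectory[0]:.1f} bits -> end {trajectory[-1]:.1f} bits")
```

With a fixed target this is plain hill climbing: it shows the ratchet of selection, not the open-endedness the authors emphasize.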

Deep Implication:

This principle flips the conventional view that entropy rules all and shows that selection produces a local, emergent arrow of increasing order — not in violation of thermodynamics, but enabled by it.

It explains:

Why complexity tends to increase over cosmic time

Why evolution doesn’t violate entropy, but coexists with it in open systems

How function is a driver of structure, not just a consequence

Principle 4: Three Criteria Define an Evolving Functional System

Core Insight:

Not all systems evolve. To accumulate functional information, a system must meet three universal conditions that enable evolution via selection. These are the minimal requirements for open-ended evolution.

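The Three Criteria:

Diverse, interacting components

Generation of variants (novel configurations)

Selection for function: preservation of configurations that contribute to persistence or new adaptive capacity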

These three criteria have been observed in:

Star systems: where gravitational instability leads to diverse planetary systems.

Mineral evolution: where new minerals arise through tectonics and biotic interaction.

Biological systems: where natural selection favors fitness.

Technological systems: where inventions are refined and spread by usefulness.

Evolution Is Cross-Domain:

Evolution by selection is not a property of life, but of certain kinds of systems.

This principle is profound because it:

Unifies evolutionary thinking across physics, geology, biology, culture, and technology.

Offers a way to test whether a system is evolving by observing whether these criteria are met.

Suggests that wherever these three dynamics occur, complexity will likely increase.
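The idea that the criteria work jointly can be illustrated with a small ablation. The toy below is our own, not an analysis from the source: bitstrings are scored against a hypothetical target, diversity is supplied by a random starting population (and is not switched off separately), and variation or selection is turned off in turn.

```python
import random

LENGTH = 64
POP_SIZE = 20


def score(config, target):
    """Toy degree of function: bits that agree with a hypothetical target."""
    return LENGTH - bin(config ^ target).count("1")


def run(variation, selection, generations=100, seed=7):
    """Return the best score after evolving with the chosen criteria on or off."""
    rng = random.Random(seed)
    target = rng.getrandbits(LENGTH)
    # Diversity: the starting population is random (identical across runs with the same seed).
    population = [rng.getrandbits(LENGTH) for _ in range(POP_SIZE)]
    for _ in range(generations):
        if variation:
            # Variation: each individual produces a copy with one flipped bit.
            offspring = [c ^ (1 << rng.randrange(LENGTH)) for c in population]
        else:
            offspring = list(population)  # exact copies, nothing new
        pool = population + offspring
        if selection:
            # Selection: keep the best-functioning configurations.
            population = sorted(pool, key=lambda c: score(c, target), reverse=True)[:POP_SIZE]
        else:
            population = rng.sample(pool, POP_SIZE)  # survivors chosen blindly
    return max(score(c, target) for c in population)


for variation, selection in [(True, True), (False, True), (True, False)]:
    print(f"variation={variation}, selection={selection}: best {run(variation, selection)}/{LENGTH}")
```

In a typical run only the case with both variation and selection climbs toward the maximum; without variation the population is stuck at its best starting member, and without selection it drifts.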

Principle 5: Complexity Emerges in Open, Nonequilibrium Systems

Core Insight:

Entropy tells us that isolated systems move toward disorder. But the universe is not isolated at small scales. Systems like planets, ecosystems, and economies are open — they exchange energy, matter, and information.

In such systems, non-equilibrium dynamics allow the emergence of ordered complexity.

How Functional Information Rises:

Input of energy fuels exploration of configuration space.

Feedback mechanisms prune the space, selecting only configurations that serve a function.

Persistence of structure stores information.

Result? A ratcheting upward of complexity that is not random but functionally directed.

Example Systems:

Earth receives solar energy, allowing the emergence of climate patterns, life, and civilization.

Cells operate far from equilibrium, maintaining their structure through metabolism.

AI systems learn better models by processing large flows of energy and data through computational feedback.

Design Implication:

If you want a system to evolve functionally:

Keep it open to inputs and outputs.

Allow for diversity and exploration.

Implement selection feedbacks.

This is the architecture of evolutionary creativity.

Principle 6: Functional Information Enables Persistence and Novelty

Core Insight:

Functional information is selected either because it helps a system:

Persist (i.e., survive, reproduce, stabilize), or

Generate novelty (i.e., discover, adapt, diversify).

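This principle introduces a nuanced taxonomy of function:

Static persistence: configurations that endure because they are stable (e.g., stable atomic nuclei)

Dynamic persistence: configurations that maintain themselves through ongoing activity (e.g., living cells, ecosystems)

Novelty generation: configurations that produce new functions (e.g., language, invention, software)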

These types are not mutually exclusive — for instance, biological evolution relies on both the stability of inheritance and the creativity of mutation.

Functional Feedback Loops:

As systems evolve, they can:

Store functional information (e.g., DNA, neural networks, culture)

Use it to sustain themselves

Use it to generate more information

Re-enter the cycle with better adaptations

This is open-ended evolution — systems that don't just adapt, but evolve the capacity to evolve.

Deep Implication:

Novelty and stability are not opposites. They are two sides of the same evolutionary dynamic, enabled by increasing functional information.

This explains why:

Life adapts faster than crystal formations.

AI systems learn increasingly complex tasks.

Cultural systems accelerate as communication improves.

Principle 7: Information Is as Fundamental as Mass, Energy, and Charge

Core Insight:

Traditional physics is built on variables like mass, energy, charge, and momentum. These define what exists and how it moves. However, Hazen and Wong argue that this picture is incomplete without information — especially functional information.

They propose that functional information should be treated as a fundamental physical quantity, just like those others.

Why It’s Groundbreaking:

In current physics, information is secondary — something you can calculate after describing particles. But in this new paradigm:

Structure alone isn’t enough.

We must ask: what can this structure do?

That capacity is a physical property of the system.

Just as mass defines how an object reacts to force, functional information defines how a system responds to opportunity and selection.

Implications for Physics:

State space must expand: It's not just “what configurations exist,” but “which configurations are functional.”

This may offer insights into non-equilibrium thermodynamics, origin of life, and even cosmological structure formation.

A new conservation principle may eventually emerge: the preservation and transformation of functional information across systems.

This changes physics from a descriptive science to an agent-like model of interacting functions.

Principle 8: Life Is an Information-Processing Phenomenon

Core Insight:

The essence of life is not carbon, water, or even replication. The defining feature of life is its ability to process information to maintain function across time.

This perspective reframes life as a computational, decision-making, self-sustaining network — a system whose components interact to:

Sense the environment

Store knowledge (e.g., DNA, memory, heuristics)

Transform signals into actions

Adapt across generations

How It Connects to Functional Information:

Living systems don’t just carry information. They select, preserve, mutate, and leverage functional information to persist and adapt.

Wong et al. suggest that life emerges at the threshold where a system can:

Sustain itself via feedback

Evolve more sophisticated information-processing capabilities

Generate novelty purposefully (via natural or artificial selection)

Consequences:

Redefines life in function-first terms — relevant for astrobiology, artificial life, and origin-of-life research.

Bridges biology and AI: both can be understood as functional information processors.

Introduces a quantifiable definition of life: a system with non-zero, increasing functional information over time.

Principle 9: Evolution Is an Algorithmic Principle of Nature

Core Insight:

This is one of the most paradigm-shifting ideas of the framework:

Evolution is not an accident of biology. It is a general algorithm that nature uses to explore the vast configuration spaces of physical systems.

What do we mean by algorithm here?

Input: variation (mutation, recombination, noise, creativity)

Process: feedback (selection, persistence, recursion)

Output: increasingly functional configurations

Evolution as a Search Algorithm:

In this view, evolution is a heuristic optimization process that:

Discovers stable atoms in the configuration space of quantum fields

Favors certain minerals under geochemical constraints

Builds organisms, languages, and machines that persist and reproduce

It’s agnostic to substrate: atoms, genes, memes, or code — all can participate in the same informational feedback loop.
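The input, process, output description above can be written as one loop that never looks inside the configurations it evolves. The sketch is a plain (1 + λ) evolutionary loop, a standard technique swapped in for illustration; the two substrates, the target phrase, and the helper names are hypothetical.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


def evolve(start: T, mutate: Callable[[T, random.Random], T], fitness: Callable[[T], float],
           generations: int, offspring: int = 10, seed: int = 0) -> T:
    """Substrate-agnostic (1 + lambda) loop: vary, then keep the best-functioning configuration."""
    rng = random.Random(seed)
    best = start
    for _ in range(generations):
        candidates = [mutate(best, rng) for _ in range(offspring)]  # input: variation
        challenger = max(candidates, key=fitness)                   # process: selection
        if fitness(challenger) >= fitness(best):                    # persistence of the better one
            best = challenger
    return best                                                     # output: a more functional configuration


# Substrate 1: text. Hypothetical function: match a target phrase.
ALPHABET = "abcdefghijklmnopqrstuvwxyz "
TARGET = "what works persists"


def mutate_text(s, rng):
    i = rng.randrange(len(s))
    return s[:i] + rng.choice(ALPHABET) + s[i + 1:]


def text_fitness(s):
    return sum(a == b for a, b in zip(s, TARGET))


# Substrate 2: numbers. Hypothetical function: every slot close to 3.0.
def mutate_vector(v, rng):
    return tuple(x + rng.gauss(0.0, 0.1) for x in v)


def vector_fitness(v):
    return -sum((x - 3.0) ** 2 for x in v)


text = evolve(" " * len(TARGET), mutate_text, text_fitness, generations=300)
vector = evolve((0.0, 0.0, 0.0), mutate_vector, vector_fitness, generations=300)
print(text, text_fitness(text))
print([round(x, 2) for x in vector])
```

Only `mutate` and `fitness` know what the substrate is; the loop itself is identical for letters and numbers, which is the narrow, concrete sense of "agnostic to substrate" this sketch demonstrates.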

Comparison to Artificial Intelligence:

The universe is essentially a search engine, and evolution is its query refinement algorithm.

Biological and cultural evolution are natural instances of the same meta-algorithm that underlies machine learning.

This links selection, creativity, and complexity in a unifying computational metaphor.

Universal Implication:

If evolution is algorithmic and universal:

We may find its signature in galaxies, language, economies, and alien systems.

The algorithmic nature of evolution may point toward a deeper law of organization, not yet fully formalized.

Principle 10: Entropy ≠ Emergence — Complexity Rises with Purpose, Not Randomness

Core Insight:

Entropy and emergence are often confused. Entropy is about disorder, while emergence is about order arising from lower-level interactions. The functional information framework draws a sharp boundary:

High entropy ≠ high complexity.

High functional information = high purposeful complexity.

Traditional View:

Entropy increases in isolated systems.

Complexity is sometimes considered a "happy accident" that fights entropy temporarily.

Functional Information View:

Systems can locally increase in functional order while still increasing global entropy.

Emergence is not random. It is guided by selection for function.

This means that pockets of increasing functional information (e.g., life, cities, cognition) can coexist with the second law of thermodynamics.

Example:

A hurricane is complex but not functional — no persistence, no novelty generation.

A cell is complex and functional — it maintains itself, adapts, and reproduces.

A neural net trained for image recognition is functional complexity encoded into weights.

Deep Implication:

This principle lets us quantify emergence meaningfully — separating:

Noise from intelligence

Pattern from purpose

Raw data from evolving function

It provides a rigorous way to study the arrow of complexity in a universe that trends toward entropy.
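The gap between entropy and functional information can be checked numerically. Shannon entropy is computed from a string's own symbol frequencies, while functional information is computed against a stated function. The sketch assumes a hypothetical function, "the binary string is a palindrome", chosen because its M is easy to count exactly.

```python
import math
import random
from collections import Counter


def shannon_entropy_per_symbol(s):
    """Empirical Shannon entropy (bits per symbol) from symbol frequencies."""
    n = len(s)
    return sum((c / n) * math.log2(n / c) for c in Counter(s).values())


def is_palindrome(s):
    return s == s[::-1]


def palindrome_functional_information(length):
    """Exact -log2(M/N) over binary strings of this length, where M counts palindromes."""
    m = 2 ** math.ceil(length / 2)  # the first half (plus any middle bit) fixes the rest
    n = 2 ** length
    return -math.log2(m / n)


LENGTH = 16
rng = random.Random(3)
half = "".join(rng.choice("01") for _ in range(LENGTH // 2))
examples = {
    "uniform": "0" * LENGTH,                                     # low entropy, functional
    "mixed palindrome": half + half[::-1],                       # higher entropy, functional
    "random": "".join(rng.choice("01") for _ in range(LENGTH)),  # high entropy, usually not functional
}
for name, s in examples.items():
    print(f"{name:17} H={shannon_entropy_per_symbol(s):.2f} bits/symbol, performs function: {is_palindrome(s)}")
print(f"functional information of 'is a palindrome': {palindrome_functional_information(LENGTH):.0f} bits")
```

Entropy ranks the strings by how mixed they are but says nothing about which ones perform the function; the functional information (8 bits for any 16-bit palindrome) belongs to the function and the space, not to how disordered a particular string looks.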

Principle 11: Functional Information Enables Cross-Domain Comparison of Intelligence

Core Insight:

We have lacked a universal metric to compare intelligent systems — from ant colonies to AI models to civilizations. IQ is anthropocentric. Neural counts are too narrow. Complexity measures fail to distinguish randomness from meaning.

Functional information offers a domain-independent framework to assess intelligence, agency, and adaptive capacity.

How It Works:

For any system (a molecule, brain, algorithm, or society), you can ask:

How large is its configuration space?

How rare are the configurations that achieve a defined function?

How much functional information is stored and maintained?

How rapidly does it accumulate more?

This gives a scalable, quantifiable basis to compare:

Species (via genomes and behavior)

AI systems (via model weights and tasks)

Civilizations (via technologies and infrastructure)

Planetary systems (via sustained habitability)
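One way to turn the four questions into numbers, assuming the functional count M can actually be measured at several points in time, is sketched below. The `Snapshot` record, the `profile` helper, and the snapshot values are hypothetical illustrations, not measurements of any real species, model, or civilization; reading "rate" as bits gained per unit time is likewise our assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class Snapshot:
    time: float      # when the measurement was taken (any consistent unit)
    functional: int  # M: configurations achieving the current level of function
    total: int       # N: size of the configuration space


def profile(history):
    """Answer the four questions for one system from a time series of (M, N) counts."""
    first, last = history[0], history[-1]
    bits = [-math.log2(s.functional / s.total) for s in history]
    elapsed = last.time - first.time
    return {
        "space_size_bits": math.log2(last.total),             # How large is the space?
        "functional_fraction": last.functional / last.total,  # How rare are functional configurations?
        "functional_information_bits": bits[-1],              # How much is stored now?
        "bits_per_unit_time": (bits[-1] - bits[0]) / elapsed if elapsed > 0 else 0.0,  # How fast?
    }


# Hypothetical snapshots of one system whose functional threshold keeps rising.
history = [Snapshot(0, 2**20, 2**32), Snapshot(10, 2**12, 2**32), Snapshot(20, 2**4, 2**32)]
print(profile(history))
```

Comparing two systems this way only makes sense when each has a clearly defined function and configuration space, which is the hard part the framework leaves to each domain.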

Implications:

Redefines intelligence as the rate and breadth of functional information accumulation.

Makes it possible to chart cognitive or evolutionary trajectories in deep time.

May inform the search for alien life by detecting function, not just biosignatures.

Aligns with the idea of a function-first AGI benchmarking standard.

Principle 12: Recursive Function Enables Self-Simulation and Self-Improvement

Core Insight:

The most complex, adaptive systems don’t just process information — they simulate themselves, reflect on their function, and improve their capacity to improve.

This is the principle of recursive functional information.

When a system accumulates enough functional information to model itself, it unlocks a new order of agency.

Examples:

Cells: perform internal regulation via feedback loops (e.g. gene expression → protein folding → regulation of expression)

Brains: simulate options before acting, refine beliefs via feedback, even simulate their own beliefs

Language: enables systems (like humans) to describe and revise their own internal architecture

LLMs: when given memory, feedback, and autonomy, can recursively prompt themselves and refine their own outputs

Why This Is a Leap:

Recursive function leads to:

Self-awareness

Strategic planning

Tool use to extend memory and agency

Acceleration of evolution (e.g. cultural evolution outpaces genetic evolution)

This is what enables:

Consciousness

Scientific inquiry

Technological singularities

Recursive self-improvement in artificial systems

Final Implication:

Functional information becomes not just the product, but the driver of exponential transformation. Recursive systems don't just evolve — they evolve how they evolve.

This is the pathway to:

Artificial General Intelligence

Civilization-scale cognition

The next phase of universal complexity
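A modest, well-studied analogue of systems that "evolve how they evolve" is self-adaptation of the mutation step size in evolution strategies. The sketch uses Rechenberg's 1/5 success rule on a toy quadratic objective; it is our illustration of the idea, not a model proposed by Wong and Hazen, and the adaptation factor 0.85 and window of 20 steps are assumed values.

```python
import random


def sphere(x):
    """Toy objective to minimize: squared distance from the origin."""
    return sum(v * v for v in x)


def one_plus_one_es(dim=5, steps=2000, window=20, seed=0):
    """(1+1) evolution strategy with step size adapted by the 1/5 success rule."""
    rng = random.Random(seed)
    parent = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
    start = sphere(parent)
    sigma, successes = 1.0, 0
    for step in range(1, steps + 1):
        # Level 1: vary the configuration and keep the child only if it is better.
        child = [v + rng.gauss(0.0, sigma) for v in parent]
        if sphere(child) < sphere(parent):
            parent, successes = child, successes + 1
        # Level 2: adjust how the search varies, based on its own recent success rate.
        if step % window == 0:
            rate = successes / window
            if rate > 0.2:
                sigma /= 0.85  # succeeding often: take bolder steps
            elif rate < 0.2:
                sigma *= 0.85  # succeeding rarely: take finer steps
            successes = 0
    return start, sphere(parent), sigma


start, final, sigma = one_plus_one_es()
print(f"objective {start:.3g} -> {final:.3g}, final step size {sigma:.3g}")
```

The rule tunes the search process itself from feedback on its own success, which is the narrow, mechanical sense in which a search can change how it searches; it is far from recursive self-improvement in any cognitive sense.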