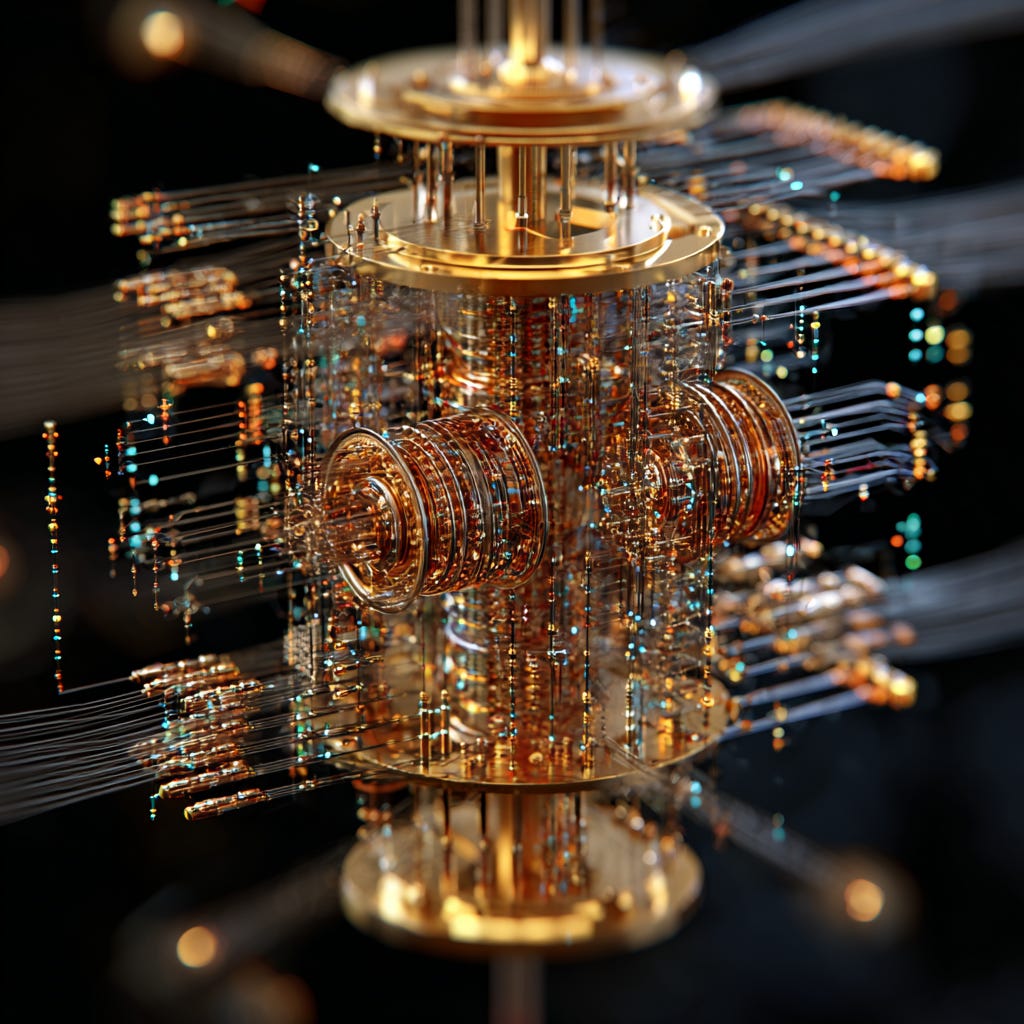

Quantum Computing: The Edge It Presents

Quantum computing leverages superposition, interference, and entanglement to explore huge spaces, estimate faster, simulate nature, cut queries, and unlock structured speedups.

Quantum computing’s edge starts with a simple but radical shift: instead of examining one possibility at a time, a quantum processor prepares a delicate blend of many possibilities at once and nudges them together as a single object. You don’t print all those possibilities at the end—you can’t—but you can shape that blended state so a global property (a pattern, a match, an average, a winner) becomes easy to read. Think less “loop over candidates” and more “set the stage so the answer walks to the front.”

The engines behind that trick are interference and entanglement. Each computational path carries a tiny “arrow.” By steering those arrows, the machine makes unhelpful paths cancel and helpful ones reinforce. Entanglement adds shared context across many variables at once, so the relationships you build into the state (“these must agree,” “these must balance”) can be kept intact while you work. Classical software fakes this with layers of bookkeeping; a quantum state is the bookkeeping, maintained by physics rather than code.

From that foundation come two kinds of speedups you should expect in the real world. First, quadratic improvements that are broad and dependable: finding “any” item that passes a check in about the square root of the usual effort, or estimating averages and probabilities with about the square root of the classical sample count. Second, super-polynomial jumps when a problem hides the right structure—repeating patterns, spectral “tones,” or native quantum dynamics. Those aren’t everywhere, but when they’re present, quantum can compress work that looks astronomical on paper into compact, precise procedures.

One promise that’s unusually concrete is simulation. Nature is quantum; classical models struggle as systems get strongly correlated. A quantum computer can play nature back—evolving molecules, materials, and devices with the same kind of rules the real system obeys—then let you read targeted properties. That turns guesswork and lab-heavy iteration into “simulate, ask, adjust, repeat.” For chemistry, batteries, catalysts, superconductors, and beyond, this is the path to fewer prototypes, clearer mechanisms, and faster design loops.

Another promise is better search and optimization under pressure. When you only need one feasible plan or one violating example, quantum routines cut the number of times you must run the expensive checker roughly quadratically (about √N calls instead of N). When the landscape is rugged, with lots of local traps and few great answers, quantum dynamics can slip through thin barriers that stall classical heuristics, and quantum walks explore networks like waves instead of random drifts. You still mix in smart classical methods, but quantum gives you new motion: through the wall, not over it.

Quantum also reframes big linear algebra. Instead of pushing numbers around row by row, you wrap a matrix inside a compact quantum routine and operate directly on its spectrum (filtering, inverting, or compressing the whole space at once), then read only the few business numbers you actually need. Separately, short quantum circuits already produce randomness that is believed to be hard to fake and can be checked statistically, which is valuable for public draws, audits, proofs of execution, and benchmarking services where trust matters.

There are systems-level advantages too. Quantum logic is reversible by nature, which in principle points toward lower energy per useful operation as hardware matures: compute, copy out the result, then uncompute the scratch instead of paying heat for erasures. And when bandwidth or egress fees dominate, quantum communication ideas let parties exchange tiny quantum “fingerprints” or leverage shared entanglement to decide global facts with fewer messages and less data movement, which is good for privacy and cost.

All of this has to be reliable, and that’s where fault tolerance comes in. By encoding one robust logical qubit across many physical ones and checking for tiny slips continuously, you can run arbitrarily long, precise programs with predictable success rates, provided the physical error rate stays below the code’s threshold. That’s the bridge from demos to products. It also clarifies expectations: there’s no generic miracle for worst-case NP-complete problems, input/output can be the bottleneck if you design carelessly, and the biggest algebraic wins generally await fault-tolerant machines, so you plan roadmaps accordingly.

The business promise is a portfolio, not a single bet: near-term wins where calls to expensive evaluators or Monte Carlo dominate; medium-term horsepower for simulation, structured math, and verifiable services; long-term step-changes once deep, clean circuits are routine. The benefit is faster answers, better answers, and—just as important—new kinds of answers that were unreachable before. The practical play is to prepare now: express your hardest steps as clean subroutines (“oracles”), identify where averages and first-hit searches are the tax, hunt for hidden structure in your models, and design hybrid loops that let quantum do what physics makes easy while classical handles the rest.

Summary

1) Seeing everything at once (superposition)

Gist: Instead of looking at one option, a quantum computer holds a faint version of all options at the same time and can poke them all at once with a single step.

How it works: You prepare a blended state that contains every candidate. One operation touches the whole crowd. A short “steering” routine then makes the thing you care about stand out when you look.

Why it’s better: Classical code must loop or sample. Here, you cover the full space in one go and read a global fact with fewer steps.

Post-it example: “Do any of these million items pass my test?” Quantum marks every item simultaneously, then steers the blend so a passing one is likely to pop out.
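To make “a faint version of all options” concrete, here is a minimal classical simulation, assuming a plain Python list of amplitudes (apply_hadamard and uniform_superposition are illustrative names, not a library API). Ten Hadamard gates turn the all-zeros state into an equal blend of all 1,024 indices. The simulator has to store every amplitude, which is exactly the exponential cost the hardware avoids, and a real measurement would still return just one index.

```python
import math

def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a state vector (list of amplitudes)."""
    h = 1 / math.sqrt(2)
    bit = 1 << qubit
    new = state[:]
    for i in range(len(state)):
        if not i & bit:                      # visit each (bit=0, bit=1) pair once
            a0, a1 = state[i], state[i | bit]
            new[i] = h * (a0 + a1)
            new[i | bit] = h * (a0 - a1)
    return new

def uniform_superposition(n_qubits):
    """Start in |00...0> and send every qubit through a Hadamard gate."""
    state = [0.0] * (2 ** n_qubits)
    state[0] = 1.0
    for q in range(n_qubits):
        state = apply_hadamard(state, q)
    return state

state = uniform_superposition(10)
print(len(state))             # 1024 candidates held by 10 qubits
print(abs(state[0]) ** 2)     # each with probability about 1/1024
```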

2) Turning the volume up on the right answers (interference)

Gist: Each possible path carries a tiny arrow (direction). You rotate those arrows so good paths add up and bad paths cancel out. That’s how you make the right outcomes loud and the wrong ones quiet.

How it works: You line up phases so helpful contributions reinforce, unhelpful ones erase. After enough nudges (for a search over N items, about √N of them), the answer has a much higher chance to appear when you measure.

Why it’s better: Classical can average numbers, but it can’t make all wrong paths cancel each other in one shot. Quantum can.

Post-it example: You’re trying to find an item that matches a rule. You flip a tiny sign on the matches, then apply a mirror-like move; repeat about √N times for N items and matches dominate.
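The “arrows” can be seen in the smallest possible case, one qubit, simulated here with plain floats (a hypothetical two-amplitude representation; no quantum library is assumed). Two Hadamard gates in a row send |0⟩ back to |0⟩ because the two paths into |1⟩ carry opposite signs and cancel; flipping one sign in between makes the paths into |0⟩ cancel instead.

```python
import math

H = 1 / math.sqrt(2)

def hadamard(a0, a1):
    # Each output amplitude adds up two paths: one arriving from |0>, one from |1>.
    return H * (a0 + a1), H * (a0 - a1)

def phase_flip(a0, a1):
    # Reverse the arrow (sign) on the |1> path only.
    return a0, -a1

# H then H: the two paths into |1> point opposite ways and cancel.
a = hadamard(*hadamard(1.0, 0.0))
print([round(abs(x) ** 2, 6) for x in a])   # [1.0, 0.0]

# H, sign flip, H: now the paths into |0> cancel instead.
b = hadamard(*phase_flip(*hadamard(1.0, 0.0)))
print([round(abs(x) ** 2, 6) for x in b])   # [0.0, 1.0]
```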

3) One shared brain across many parts (entanglement)

Gist: Several qubits can share a single, inseparable state. Check (measure) one part and you learn something about the rest. It’s built-in global consistency.

How it works: You prepare a state where relationships (“these must agree,” “these must balance”) are baked in, and you choose operations that preserve those relationships, so every move keeps the whole state consistent.

Why it’s better: Instead of bookkeeping global constraints with lots of passes, the state itself enforces them while you compute.

Post-it example: In a plan with tight totals, entanglement keeps “sum equals target” true automatically as you tweak pieces.
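One concrete way to keep a total fixed is to use only moves that conserve it. The sketch below is a classical state-vector simulation with hypothetical helper names. It uses a two-qubit “XY” partial swap of the kind used as a constraint-preserving mixer in some QAOA variants. Starting with one unit assigned to piece 0, two such moves spread that unit across all three pieces. The result is an entangled state in which every component still holds exactly one unit, so “sum equals target” holds without a separate check. The choice of operation preserves the constraint; entanglement is what lets the state carry all feasible allocations at once.

```python
import math

def xy_mix(state, i, j, theta):
    """Apply exp(-i*theta*(XX + YY)/2) to qubits i and j of a state vector.
    It only trades amplitude between 'bit i set, bit j clear' and the reverse,
    so the number of 1s (the 'sum') in every basis state is unchanged."""
    new = state[:]
    bi, bj = 1 << i, 1 << j
    c, s = math.cos(theta), math.sin(theta)
    for k in range(len(state)):
        if k & bi and not k & bj:
            partner = (k ^ bi) | bj
            a, b = state[k], state[partner]
            new[k] = c * a - 1j * s * b
            new[partner] = -1j * s * a + c * b
    return new

def ones(k):
    return bin(k).count("1")

# Three pieces, one unit to allocate: start with the unit on piece 0 (|001>).
state = [0j] * 8
state[0b001] = 1
state = xy_mix(state, 0, 1, math.pi / 4)
state = xy_mix(state, 1, 2, math.pi / 4)

for k, amp in enumerate(state):
    if abs(amp) > 1e-12:
        print(format(k, "03b"), round(abs(amp) ** 2, 3))   # only 001, 010, 100 appear
```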

4) Find a needle with far fewer checks (amplitude amplification)

Gist: If you can recognize a good item when you see it, you can find one with about the square root of the usual number of checks.

How it works: Mark good items (flip their arrow), then do a simple two-step “mirror” move that boosts good ones and dims the rest. Repeat about (π/4)√N times when one item among N is good; measure.

Why it’s better: You’re minimizing calls to the expensive checker, not scanning the whole list.

Post-it example: Compliance scan over 100M SKUs with a single violator: roughly 7,850 checker calls (about (π/4)·√100M) instead of up to 100 million.
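The square-root rule can be turned into a concrete call budget. This sketch assumes the textbook schedule of ⌊π/(4θ)⌋ rounds with sin θ = √(M/N) and a known number M of violators. grover_plan is a hypothetical helper, and the count ignores the per-call overhead of running the checker as a quantum circuit on error-corrected hardware.

```python
import math

def grover_plan(n_items, n_marked=1):
    """Textbook amplitude-amplification schedule for n_marked hits among n_items.
    Returns (oracle_calls, success_probability)."""
    theta = math.asin(math.sqrt(n_marked / n_items))   # initial angle toward the hits
    k = math.floor(math.pi / (4 * theta))               # rounds before overshooting
    p = math.sin((2 * k + 1) * theta) ** 2              # chance the final read is a hit
    return k, p

calls, p = grover_plan(100_000_000)
print(calls, round(p, 6))   # 7853 1.0
```

When M is not known in advance, randomized schedules keep the expected cost on the order of √(N/M) calls.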

5) Pull the hidden beat into focus (phase estimation / “Fourier lens”)

Gist: When a process has an underlying rhythm (a repeating pattern or stable “tone”), you can collect faint hints of it and then do a tiny unmixing step that makes the true beat snap into a clear pointer.

How it works: Let the system imprint little twists tied to its internal rhythm; then run a short refocusing routine that compresses those hints into a simple readout.

Why it’s better: You get the global pattern without scanning everything.

Post-it example: Detect the repeat cycle in a complicated transformation quickly, instead of trying tons of inputs.
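The “refocusing” step is phase estimation. Below is a minimal sketch that computes, classically, the outcome distribution of textbook phase estimation with t counting qubits, assuming a perfect eigenstate and noiseless gates (qpe_distribution is an illustrative name). A hidden phase of 0.3 becomes a sharp peak at the 6-bit pointer closest to 0.3 × 64.

```python
import cmath, math

def qpe_distribution(phi, t):
    """Ideal outcome probabilities of phase estimation with t counting qubits,
    for an eigenstate whose eigenvalue is exp(2*pi*i*phi)."""
    size = 2 ** t
    probs = []
    for k in range(size):
        # After the inverse Fourier transform, outcome k sums the twists exp(2*pi*i*j*phi)
        # against exp(-2*pi*i*j*k/size): a geometric sum that peaks when k/size ~ phi.
        amp = sum(cmath.exp(2j * math.pi * j * (phi - k / size)) for j in range(size)) / size
        probs.append(abs(amp) ** 2)
    return probs

probs = qpe_distribution(phi=0.3, t=6)
k_best = max(range(len(probs)), key=probs.__getitem__)
print(k_best, k_best / 64)   # 19 0.296875: the 6-bit pointer closest to 0.3
```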

6) Play nature back (Hamiltonian simulation)

Gist: Program the quantum computer to behave like the real quantum system you care about (molecule, material, device), then ask it questions.

How it works: Translate “who interacts with whom and how strongly” into small gate sequences; apply many tiny nudges that, together, recreate the real dynamics; measure targeted properties.

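Why it’s better: Quantum systems are hard for classical machines to track, because an exact classical description grows exponentially with system size; a quantum device is the right substrate, and for many physically relevant (for example, local) interactions its cost grows only polynomially.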

Post-it example: Watch ions move in a battery electrolyte and read conductivity signatures before you ever go to the lab.
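The “many tiny nudges” are a product formula (Trotterization). This toy sketch uses a hypothetical one-qubit Hamiltonian H = aX + bZ, small enough that the exact evolution has a closed form. It checks that alternating short X and Z steps converge to that exact evolution as the step count grows. Real molecular Hamiltonians have many more terms, and higher-order formulas or other simulation methods are often preferred.

```python
import cmath, math

def matmul(a, b):
    """2x2 complex matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def exp_x(angle):
    """exp(-i * angle * X)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -1j * s], [-1j * s, c]]

def exp_z(angle):
    """exp(-i * angle * Z)."""
    return [[cmath.exp(-1j * angle), 0], [0, cmath.exp(1j * angle)]]

def exact(a, b, t):
    """exp(-i * t * (aX + bZ)) in closed form, using (aX + bZ)^2 = (a^2 + b^2) I."""
    w = math.hypot(a, b)
    c, s = math.cos(w * t), math.sin(w * t) / w
    return [[c - 1j * s * b, -1j * s * a], [-1j * s * a, c + 1j * s * b]]

def trotter(a, b, t, steps):
    """Approximate the same evolution with `steps` alternating X and Z nudges."""
    dt = t / steps
    step = matmul(exp_x(a * dt), exp_z(b * dt))
    u = [[1, 0], [0, 1]]
    for _ in range(steps):
        u = matmul(step, u)
    return u

def max_error(u, v):
    return max(abs(u[i][j] - v[i][j]) for i in range(2) for j in range(2))

a, b, t = 1.0, 0.7, 2.0          # hypothetical interaction strengths and run time
target = exact(a, b, t)
errors = [max_error(trotter(a, b, t, n), target) for n in (1, 10, 100, 1000)]
print(errors)                    # error falls roughly like 1/steps once steps are large
```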

7) Do matrix surgery directly (block-encoding & quantum linear algebra)

Gist: Hide a big matrix inside a quantum operation so you can apply functions of it—like filtering, inverting, or exponentiating—to a whole vector at once.

How it works: Wrap your matrix as a callable block in a unitary. Use a spectral toolkit to apply “invert here, damp there, zero that.” Transform the entire space in one go; read just the scalar(s) you need.

Why it’s better: Work in the spectrum (where the difficulty lives) instead of pushing numbers around row by row.

Post-it example: Solve a giant linear system once and read the one risk number you needed, without dumping the whole solution vector.
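A toy block-encoding makes the idea concrete. The sketch assumes the special case A = αX + βZ with α² + β² ≤ 1, where A² is a multiple of the identity, so the completing blocks are just scalars. General block-encodings are built with other constructions (for example, linear combinations of unitaries). block_encode and apply are illustrative names. Running U on the ancilla-0 half of the space reproduces A·ψ exactly.

```python
import math

def block_encode(alpha, beta):
    """Return (U, A): U = [[A, s*I], [s*I, -A]] with A = alpha*X + beta*Z.
    Since A @ A = (alpha**2 + beta**2) * I, choosing s = sqrt(1 - alpha**2 - beta**2)
    makes U unitary, and its top-left block (ancilla = 0) is exactly A."""
    if alpha ** 2 + beta ** 2 > 1:
        raise ValueError("A must have norm at most 1 to sit inside a unitary")
    s = math.sqrt(1 - alpha ** 2 - beta ** 2)
    A = [[beta, alpha], [alpha, -beta]]
    U = [[0.0] * 4 for _ in range(4)]
    for i in range(2):
        for j in range(2):
            U[i][j] = A[i][j]            # ancilla 0 -> 0 block: A
            U[i + 2][j + 2] = -A[i][j]   # ancilla 1 -> 1 block: -A
        U[i][i + 2] = s                  # off-diagonal blocks: s * I
        U[i + 2][i] = s
    return U, A

def apply(M, v):
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

U, A = block_encode(0.3, 0.4)
psi = [0.6, 0.8]                         # normalized input vector
out = apply(U, psi + [0.0, 0.0])         # ancilla prepared in |0>
print(out[:2], apply(A, psi))            # ancilla-0 half matches A @ psi
print(sum(x * x for x in out[:2]))       # chance the ancilla reads 0: ||A psi||^2, about 0.25
```

The ancilla-0 outcome is the “read just the scalar(s) you need” branch; its probability, ‖Aψ‖², is the price of hiding a non-unitary matrix inside a unitary.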

8) Explore networks like a wave (quantum walks)

Gist: Instead of drunkenly wandering a graph, you send a wave through it. Interference cancels backtracking and pushes flow toward interesting regions faster.

How it works: Local “coin” and “shift” moves propagate a coherent wave; small tags at target nodes act like resonators that pull amplitude in.

Why it’s better: On many graph families, you reach targets and mix across large graphs in fewer steps than random walking.

Post-it example: Find a marked location in a huge network with far fewer probes.

9) Cut Monte Carlo samples by a square root (amplitude estimation)

Gist: Estimating an average or probability to tight error bars normally needs tons of independent samples. Quantum can reach the same accuracy with roughly the square root of that many coherent queries.

How it works: Prepare all scenarios at once, encode each outcome as a tiny internal nudge, then use interference to read the overall average directly.

Why it’s better: You slash the number of times you must run the expensive simulator/model.

Post-it example: Compute a risk exceedance rate with thousands of path evaluations instead of millions.
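Here is a minimal sketch of the canonical, phase-estimation-based variant of amplitude estimation, simulated classically. It assumes the scenario-loading and payoff-encoding circuit already exists and is noise-free, and it only computes the ideal distribution of estimates (amplitude_estimation and the value 0.137 are hypothetical). With 8 counting qubits, i.e. 255 uses of the Grover-type operator, the most likely estimate lands within the textbook error bound of roughly 2π√(a(1−a))/2^t, here under 0.01.

```python
import cmath, math

def qpe_probs(phi, t):
    """Ideal phase-estimation outcome probabilities for eigenphase phi (in turns)."""
    M = 2 ** t
    return [abs(sum(cmath.exp(2j * math.pi * j * (phi - y / M)) for j in range(M)) / M) ** 2
            for y in range(M)]

def amplitude_estimation(a, t):
    """Distribution of estimates from canonical amplitude estimation of a in [0, 1].
    With sin(theta)^2 = a, the Grover-type operator has eigenvalues exp(+/-2i*theta),
    i.e. eigenphases theta/pi and 1 - theta/pi in turns, and the prepared state is
    an equal mix of the two eigenvectors."""
    theta = math.asin(math.sqrt(a))
    M = 2 ** t
    p_plus, p_minus = qpe_probs(theta / math.pi, t), qpe_probs(1 - theta / math.pi, t)
    dist = {}
    for y in range(M):
        estimate = round(math.sin(math.pi * y / M) ** 2, 12)  # readout y -> estimate
        dist[estimate] = dist.get(estimate, 0.0) + 0.5 * (p_plus[y] + p_minus[y])
    return dist

a_true = 0.137                        # hypothetical exceedance probability
dist = amplitude_estimation(a_true, t=8)
best = max(dist, key=dist.get)
print(best, round(dist[best], 3))     # most likely estimate and its probability
```

Each extra counting qubit doubles the number of operator uses and roughly halves the error, so error falls like 1/(queries); classical sampling error falls only like 1/√(samples).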

10) Fewer black-box calls, provably (query/“oracle” separations)

Gist: If the bottleneck is “call the expensive thing again,” there are tasks where quantum provably needs fewer calls than any classical method. That’s a theorem, not marketing.

How it works: Ask the oracle once on a superposed set to touch everything in parallel, then use interference to summarize. Repeat only as often as the task requires: once for some problems, about √N times for unstructured search.

Why it’s better: Direct savings on API hits, database probes, heavy evaluation calls.

Post-it example: Find any violating record with ~√N validator calls instead of N.
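The smallest textbook separation is Deutsch’s problem: decide whether a one-bit function is constant or balanced. Any classical method must evaluate it twice; one quantum query suffices. The sketch below simulates that single query classically. make_phase_oracle and deutsch are illustrative names. A simulator necessarily evaluates f on both inputs internally, which is the work the device’s one superposed call replaces.

```python
import math

H = 1 / math.sqrt(2)

def make_phase_oracle(f):
    """Wrap f: {0,1} -> {0,1} as a phase oracle |x> -> (-1)**f(x) |x>, counting calls."""
    calls = {"n": 0}
    def oracle(amps):
        calls["n"] += 1                  # one call, however many inputs are in superposition
        return [a * (-1) ** f(x) for x, a in enumerate(amps)]
    return oracle, calls

def deutsch(oracle):
    amps = [H, H]                        # Hadamard on |0>: both inputs present
    amps = oracle(amps)                  # the single query
    p0 = (H * (amps[0] + amps[1])) ** 2  # final Hadamard, probability of reading 0
    return "constant" if p0 > 0.5 else "balanced"

results = {}
for name, f in [("always 0", lambda x: 0), ("always 1", lambda x: 1),
                ("identity", lambda x: x), ("negation", lambda x: 1 - x)]:
    oracle, calls = make_phase_oracle(f)
    results[name] = (deutsch(oracle), calls["n"])
print(results)   # every function classified correctly, with 1 call each
```

Deutsch–Jozsa extends this to n-bit inputs, where one query replaces up to 2^(n−1) + 1 deterministic classical queries.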

11) Dice you can roll but can’t fake (sampling separations)

Gist: Some short quantum circuits generate distributions that quantum hardware samples easily but that, under widely accepted complexity-theoretic assumptions, classical computers cannot mimic efficiently.

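How it works: Run the circuit many times; collect bitstrings. Statistical tests, such as cross-entropy benchmarking, check that the samples follow the intended distribution (for large circuits, computing the reference probabilities is itself classically expensive); faking the distribution classically is believed to be astronomically hard.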

Why it’s better: Early, real horsepower for verifiable randomness, proof-of-execution, and hard-to-model distributions.

Post-it example: Public lotteries or audits with outputs anyone can verify came from a real quantum roll.

12) Slide through thin walls (adiabatic computing & tunneling)

Gist: Turn your problem into a landscape where good answers are valleys. Start in an easy valley and morph the terrain until that valley becomes the “right” one. Quantum tunneling can let you pass through thin ridges that trap classical search.

How it works: Encode constraints as hills and objectives as slopes, ramp from “easy” terrain to “real” terrain, slow down where it pinches, and let tunneling hop you into better basins.

Why it’s better: Potentially fewer stalls on rugged problems; constraints are enforced by the physics while you search.

Post-it example: Workforce scheduling with lots of rules: shape the landscape so feasible, low-cost schedules are downhill and reachable.
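The slow-ramp idea can be checked on the smallest possible landscape. In this sketch (a noise-free classical simulation; anneal and evolve_step are hypothetical names), H(s) = −(1−s)X − sZ is morphed from an easy start, whose lowest state |+⟩ is trivial to prepare, to a “problem” whose lowest state |0⟩ is the answer. A slow ramp ends on the answer almost surely; a rushed one does no better than a coin flip. This toy has no local traps, so it illustrates the ramp, not a tunneling advantage.

```python
import math

def evolve_step(state, a, b, dt):
    """Apply exp(-i*dt*(a*X + b*Z)) to a one-qubit state [amp0, amp1] (closed form)."""
    w = math.hypot(a, b)
    c, s = math.cos(w * dt), math.sin(w * dt) / w
    u00, u01 = c - 1j * s * b, -1j * s * a
    u10, u11 = -1j * s * a, c + 1j * s * b
    return [u00 * state[0] + u01 * state[1], u10 * state[0] + u11 * state[1]]

def anneal(total_time, steps=3000):
    """Ramp H(s) = -(1-s)*X - s*Z from the easy landscape to the problem landscape.
    Returns the probability of ending in |0>, the problem's best answer."""
    state = [1 / math.sqrt(2), 1 / math.sqrt(2)]   # |+>, the lowest state of -X
    dt = total_time / steps
    for k in range(steps):
        s = (k + 0.5) / steps                      # midpoint of this time slice
        state = evolve_step(state, -(1 - s), -s, dt)
    return abs(state[0]) ** 2

print(anneal(30.0))   # slow ramp: close to 1
print(anneal(0.1))    # rushed ramp: close to 0.5, no better than guessing
```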

13) Do logic without paying heat for erasing (reversible computation)

Gist: Erasing information carries a minimum heat cost (Landauer’s principle). Quantum logic is reversible by default, so you can compute, copy out the answer, then uncompute your scratch, in principle paying far less “heat per useful step” in the long run.

How it works: Build routines so they can run backward cleanly. After you get the result, roll the steps back to restore temporary space to empty without erasing.

Why it’s better: Future-proof path to lower energy per operation and less thrash from resets/measurements.

Post-it example: A heavy scoring function that leaves no trash behind: copy the score, then undo the work to reset memory without paying erasure heat.
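Compute, copy, uncompute can be shown with ordinary bits and reversible gates (Toffoli and CNOT), which is the same bookkeeping pattern quantum circuits use. This sketch uses hypothetical helper names and classical bits only; nothing about energy is measured. It computes a AND b AND c through two scratch bits, copies the answer out, then runs the same gates in reverse so the scratch returns to zero without ever being erased.

```python
from itertools import product

def toffoli(bits, c1, c2, target):
    """Reversible AND: flip target iff both controls are 1 (the gate is its own inverse)."""
    if bits[c1] and bits[c2]:
        bits[target] ^= 1

def cnot(bits, control, target):
    """Reversible copy: XOR the control into a target that starts at 0."""
    if bits[control]:
        bits[target] ^= 1

def and3_clean(a, b, c):
    """Compute a AND b AND c into 'out' via scratch bits, then uncompute the scratch."""
    bits = {"a": a, "b": b, "c": c, "s1": 0, "s2": 0, "out": 0}
    steps = [("a", "b", "s1"), ("s1", "c", "s2")]
    for x, y, target in steps:               # compute
        toffoli(bits, x, y, target)
    cnot(bits, "s2", "out")                  # copy the answer out
    for x, y, target in reversed(steps):     # uncompute: same gates, reverse order
        toffoli(bits, x, y, target)
    return bits

for a, b, c in product((0, 1), repeat=3):
    r = and3_clean(a, b, c)
    assert r["out"] == (a & b & c)                  # answer is correct
    assert r["s1"] == r["s2"] == 0                  # scratch back to zero, nothing erased
    assert (r["a"], r["b"], r["c"]) == (a, b, c)    # inputs untouched
print("all 8 inputs: correct answer, clean scratch")
```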

14) Learn more while moving fewer bits (communication advantages)

Gist: When bandwidth and egress are the wall, quantum lets parties exchange tiny quantum “fingerprints” or use shared entanglement so they can decide global facts with far fewer messages.

How it works: Each side encodes its data into small quantum states; interference of those states reveals equality, overlap, or a count—without shipping the raw data.

Why it’s better: Lower bandwidth, fewer round-trips, better privacy posture.

Post-it example: Two banks detect overlapping customers by swapping tiny quantum fingerprints instead of big, risky datasets.
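The “tiny fingerprint” comparison rests on the swap test, which reports “same” with probability (1 + |⟨u|v⟩|²)/2. The sketch below computes that probability classically for two hypothetical fingerprints of 256 amplitudes (8 qubits). As a labeled stand-in for the error-correcting code used in published fingerprinting schemes, it derives ±1 signs from SHA-256. fingerprint, swap_test_pass_prob, and the record IDs are illustrative.

```python
import hashlib, math

M = 256   # fingerprint length: 256 amplitudes fit in log2(256) = 8 qubits

def fingerprint(record: str):
    """Hypothetical fingerprint: a normalized +/-1 vector built from SHA-256 bits.
    (A stand-in for an error-correcting code; not a published scheme.)"""
    digest = hashlib.sha256(record.encode()).digest()        # 32 bytes = 256 bits
    bits = [(byte >> k) & 1 for byte in digest for k in range(8)]
    return [(-1) ** b / math.sqrt(M) for b in bits]

def swap_test_pass_prob(u, v):
    """Probability that the swap test reports 'same': (1 + <u|v>^2) / 2."""
    overlap = sum(x * y for x, y in zip(u, v))
    return (1 + overlap ** 2) / 2

same = swap_test_pass_prob(fingerprint("cust-1041"), fingerprint("cust-1041"))
diff = swap_test_pass_prob(fingerprint("cust-1041"), fingerprint("cust-2230"))
print(same, diff)   # 1.0 for equal records, roughly 0.5 for different ones
```

Equal records always pass; different ones pass only about half the time, so k repeated tests push the false-match rate down to roughly 2^−k. This covers an equality check on one pair of records; detecting overlap between two whole customer lists needs more machinery.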

15) Use once, can’t copy, tamper tells on you (no-cloning & “measurement as computation”)

Gist: You cannot clone an unknown quantum state; trying to peek disturbs it. You can also design routines where the only thing you ever read is the final bit you care about, and the act of reading consumes the state.

How it works: Issue tokens as quantum states that valid readers can verify; fakes fail because copying isn’t possible. Or encode a number as an internal phase and read just that number once.

Why it’s better: Unforgeable tokens, cheat-sensitive seals, and minimal-leakage analytics.

Post-it example: Single-use API credits that can be spent but never cloned; cheaters expose themselves by physics.
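The “tamper tells on you” effect can be seen in Wiesner-style quantum money, where each qubit of a token is prepared in a secret basis. This sketch simulates, classically, one simple counterfeiting strategy: measure in a guessed basis, then re-prepare what was seen. counterfeit_pass_rate and the trial count are illustrative, and other attacks are not modeled.

```python
import random

def counterfeit_pass_rate(trials=100_000, seed=7):
    """Measure-and-resend attack on one Wiesner-style money qubit.
    The bank picks a secret basis (Z or X) and a bit; the forger doesn't know the basis."""
    rng = random.Random(seed)
    passed = 0
    for _ in range(trials):
        basis, bit = rng.choice("ZX"), rng.randint(0, 1)
        guess = rng.choice("ZX")                           # forger's measurement basis
        # Right basis: the forger reads the bit. Wrong basis: the outcome is a coin flip.
        seen = bit if guess == basis else rng.randint(0, 1)
        # Forger re-prepares (guess, seen); the bank measures in its own secret basis.
        check = seen if guess == basis else rng.randint(0, 1)
        passed += (check == bit)
    return passed / trials

p = counterfeit_pass_rate()
print(round(p, 3), 0.75 ** 20)   # about 0.75 per qubit; a 20-qubit token passes ~0.3% of the time
```

Each qubit survives this attack with probability 3/4, so an n-qubit token passes with about (3/4)^n.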

16) Keep errors small while you go long (fault-tolerant composability)

Gist: Real qubits are noisy. You bundle many of them into a logical qubit, constantly check for tiny slips, and fix them on the fly so long programs succeed reliably.

How it works: Gentle parity checks reveal where errors happened without revealing the data; a decoder corrects or tracks them; tricky gates are fed by carefully prepared “magic” states.

Why it’s better: Deep, precise algorithms become product-grade: composable, auditable, SLA-able.

Post-it example: Run a million-step spectral routine with a controlled error budget rather than hoping the device stays lucky.
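The parity-check idea already shows up in the classical 3-bit repetition code, used here as a simplified stand-in: it handles bit flips only, whereas quantum codes such as the surface code must also catch phase errors. The two checks compare neighbouring bits, so they locate a single flip without revealing the stored value. Summing over all error patterns gives the logical failure rate exactly (helper names are illustrative).

```python
from itertools import product

SYNDROME_TO_FLIP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def decode(received):
    """Two parity checks (bit0 xor bit1, bit1 xor bit2) point at the single flipped bit."""
    syndrome = (received[0] ^ received[1], received[1] ^ received[2])
    fixed = list(received)
    flip = SYNDROME_TO_FLIP[syndrome]
    if flip is not None:
        fixed[flip] ^= 1
    return fixed[0]

def logical_error_rate(p):
    """Exact failure probability when each of the 3 bits flips independently with prob p."""
    failure = 0.0
    for errors in product((0, 1), repeat=3):
        weight = 1.0
        for e in errors:
            weight *= p if e else 1 - p
        # Logical 0 is stored as 000, so the received bits equal the error pattern.
        if decode(errors) != 0:
            failure += weight
    return failure

for p in (0.01, 0.1):
    print(p, logical_error_rate(p), 3 * p ** 2 - 2 * p ** 3)   # decoder vs closed form
```

At p = 0.01 the logical rate is about 3 × 10⁻⁴, more than 30 times better than an unprotected bit; the code only helps while p stays below 1/2, a toy version of the threshold condition.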

17) Know where the real wins are (complexity-class evidence)

Gist: We have strong theory about which problem shapes give quantum a fundamental edge (structure like hidden periods, spectra, native quantum dynamics; or generic black-box search/averaging) and which don’t (arbitrary worst-case NP-complete).

How it works: Use the map: expect exponential gains when structure matches; quadratic gains for search/averaging; no generic miracle for worst-case NP-complete. Plan depth and hardware accordingly.

Why it’s better: You fund the right things, set realistic expectations, and schedule near-term vs. long-term bets sensibly.

Post-it example: Prototype Monte Carlo with amplitude estimation now; prepare deep spectral/structure jobs for the fault-tolerant era; don’t promise generic exponential wins on arbitrary NP-hard problems.

One-page memory aid (super-blunt)

See everything at once. Touch the entire space in one go.

Make good paths louder. Use interference to boost winners, cancel losers.

Carry global rules for free. Entanglement keeps the whole plan consistent.

Find one fast. About √N checks instead of N to hit a needle.

Expose hidden beats. Turn faint periodicity into a crisp pointer.

Simulate what’s quantum. Let a quantum box be the system you care about.

Do matrix surgery. Operate in the spectrum on the whole vector at once.

Walk like a wave. Cover graphs faster than random wandering.

Square-root the Monte Carlo tax. Same accuracy, far fewer runs.

Pay for fewer calls. Provable savings when calls are the cost.

Roll unfakeable dice. Sampling you can use and verify.

Slip through walls. Tunneling avoids local traps in rugged searches.

Don’t burn paper. Reversible steps cut the heat bill.

Talk less, know enough. Decide with tiny quantum messages.

Use once, can’t copy. Tokens that tell on tampering.

Go long with confidence. Error-correct and compose big programs.

Aim where theory says. Spend on structured wins, not wishful thinking.

The Principles

Principle 1 — Exponential State-Space Representation

(superposition as “compressed compute,” no equations)

Definition (what it is)

A classical register holds exactly one configuration at a time.

A quantum register can hold a blend of many configurations at once — a superposition. When you run a quantum step, you act on all of those configurations simultaneously. You can’t print them all at the end (a measurement gives you one outcome), but you can shape the blend so that a global property of the whole set becomes easy to read.

Business gist (why it matters)

Superposition gives you combinatorial coverage in one pass. If a business task explodes combinatorially—millions of scenarios, portfolios, routes, molecular configurations—classical methods either prune aggressively (risking quality) or pay huge compute bills. Quantum superposition lets you prepare all candidates simultaneously, operate on them in parallel within one coherent state, and then, via a short “readout routine” (interference/estimation), extract the number you actually care about. Net effect:

More thorough exploration (fewer heuristics, less guesswork).

Fewer computational steps to reach a reliable global answer (lower latency for high-stakes decisions).

Access to answers classical methods can’t reach at any reasonable cost (new products, new schedules, new materials).

Think of it as moving from “try many things one-by-one” to “touch everything once, then ask the right question.”

Scientific explanation (simple but precise)

Many-at-once representation: A quantum state encodes all candidates at once. Think of ghost copies of every option layered together.

Amplitudes have direction: Each candidate has a strength and a direction (phase). Later steps rotate those directions to set up what you want to read.

You extract a summary, not the phonebook: Because you only get one shot at the end, algorithms are designed so the summary you care about dominates the final readout.

Two helpers make it work:

Interference lines up helpful contributions and cancels the rest.

Entanglement keeps global relationships consistent while you operate.

Why this beats classical in principle: Classical code must visit candidates; quantum code can transform the whole population at once and then read a global fact with fewer steps.

A deep, concrete example (plain language)

Task: Find any record in a gigantic, unsorted dataset that passes a complex rule.

Classical mindset: Call the rule checker on items until you find a match. Worst case: check almost everything.

Quantum mindset powered by superposition:

Spread out: Prepare a state that includes every index faintly — all items are “present” at once.

Tag in one pass: Run your rule checker once on that blended state. Because every item is present, the checker marks all passing items simultaneously (internally, it flips a tiny flag on each match).

Steer the blend: Apply a short, fixed routine that boosts the presence of marked items and dims the rest. Repeat the tag-and-steer pair about (π/4)√N times for N items.

Peek: Read once. You’re now very likely to land on a passing item.

Why it’s better: You paid for far fewer checker calls, yet you effectively touched every item. That’s the superposition dividend.
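The four steps can be simulated for a small list. This sketch is a classical state-vector simulation (grover_search and the matching index are hypothetical), and it assumes the number of matches is known in advance so it can use the standard fixed schedule. With 4,096 items, 50 checker calls make the single match almost certain to appear, against up to 4,096 classical checks.

```python
import math

def grover_search(n_qubits, is_match):
    """Classically simulate Grover search over 2**n_qubits indices.
    Returns (final probabilities, number of oracle calls used)."""
    N = 2 ** n_qubits
    # A simulator must evaluate the checker on every index to know which signs to flip;
    # the device instead makes one superposed call per round.
    marked = [bool(is_match(i)) for i in range(N)]
    M = sum(marked)
    if M == 0:
        raise ValueError("no matching item; this fixed schedule assumes a known M >= 1")
    rounds = math.floor(math.pi / (4 * math.asin(math.sqrt(M / N))))
    amps = [1 / math.sqrt(N)] * N                                 # Spread
    for _ in range(rounds):
        amps = [-a if m else a for a, m in zip(amps, marked)]     # Tag: flip the matches
        mean = sum(amps) / N
        amps = [2 * mean - a for a in amps]                       # Steer: reflect about the mean
    return [a * a for a in amps], rounds

probs, calls = grover_search(12, lambda i: i == 2718)   # hypothetical: 1 match in 4,096
print(calls, probs[2718])                                 # Peek: the match dominates
```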

Five opportunity patterns anchored in this principle

(For each: the principle used → nature of the opportunity → simple technical “how”.)

1) Unstructured search / “Find one needle”

Principle: Superposition over all items; one predicate oracle marks all needles in a single logical call.

Opportunity: Any yes/no screening with weak structure (fraud hit, defective SKU, matching document, satisfying assignment) where worst-case classical search is linear.

How it works (simple):

Put all candidates in a single superposed register.

One oracle call flips the phase of all “needles.”

Alternate that oracle call with a short amplitude-boost routine, about √N rounds in all, to concentrate probability on the needles; measure to get one.

2) Average / probability estimation (“What’s the mean?”)

Principle: Superposition over scenarios; encode each scenario’s contribution into an amplitude; one circuit processes all scenarios; a compact phase readout gives the global average.

Opportunity: When you’d normally run millions of Monte Carlo trials (risk, reliability, A/B meta-analysis), superposition lets one routine touch every trial in parallel and recover the mean with quadratically fewer coherent “samples.”

How it works (simple):

Prepare a superposition over all random seeds/scenarios.

Map each scenario’s outcome into a small rotation (amplitude).

Use a phase-sensitive readout to estimate the global mean with far fewer repetitions.
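The “map each outcome into a small rotation” step can be shown in isolation. This sketch uses a hypothetical four-scenario model, and encoded_mean is an illustrative name. It rotates a flag qubit by RY(2·arcsin √f(x)) for each scenario x, so the flag reads 1 with probability exactly equal to the mean payoff. Payoffs are assumed pre-scaled into [0, 1]; in the full algorithm, amplitude estimation reads that probability instead of repeated sampling.

```python
import math

def encoded_mean(probs, payoff):
    """Build sum_x sqrt(p(x)) |x> (sqrt(1 - f(x)) |0> + sqrt(f(x)) |1>) and return
    the probability that the flag qubit reads 1. Payoff values must lie in [0, 1]."""
    state = {}
    for x, (p, f) in enumerate(zip(probs, payoff)):
        angle = 2 * math.asin(math.sqrt(f))               # RY(angle) on the flag qubit
        state[(x, 0)] = math.sqrt(p) * math.cos(angle / 2)
        state[(x, 1)] = math.sqrt(p) * math.sin(angle / 2)
    return sum(a * a for (x, flag), a in state.items() if flag == 1)

# Hypothetical model: scenario probabilities and a loss already scaled into [0, 1].
probs = [0.4, 0.3, 0.2, 0.1]
loss = [0.0, 0.25, 0.5, 1.0]
print(encoded_mean(probs, loss), sum(p * f for p, f in zip(probs, loss)))   # both 0.275
```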

3) Pattern/period detection (“Is there hidden regularity?”)

Principle: Superposition queries a function at all inputs at once; periodic structure is written into phases across the whole register; a short readout transforms those phases into a sharp signature.

Opportunity: Any task whose crux is “there is a repeating pattern / symmetry / period” (from algebraic problems to certain signal-processing forms). Superposition is what lets you compare every input in one go, so the global regularity emerges without scanning.

How it works (simple):

Prepare superposition over all inputs.

Evaluate the function once (on the superposition).

A compact transform turns the encoded phase pattern into a spike that reveals the period.
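A toy run of the textbook period-finding example shows the spike pattern. The sketch assumes f(x) = 7^x mod 15 (period 4), a 6-qubit input register, and the common simplification that the output register was measured and returned 1. It then computes the Fourier-transformed probabilities classically (period_peaks is an illustrative name).

```python
import cmath, math

def period_peaks(f, n_qubits, measured_value):
    """Input register after the output read measured_value: equal weight on every x
    with f(x) == measured_value. A Fourier transform then concentrates the weight
    on multiples of 2**n_qubits / r, where r is the period."""
    Q = 2 ** n_qubits
    support = [x for x in range(Q) if f(x) == measured_value]
    amp = 1 / math.sqrt(len(support))
    probs = []
    for k in range(Q):
        a = sum(amp * cmath.exp(2j * math.pi * x * k / Q) for x in support) / math.sqrt(Q)
        probs.append(abs(a) ** 2)
    return probs

probs = period_peaks(lambda x: pow(7, x, 15), n_qubits=6, measured_value=1)
peaks = [k for k, p in enumerate(probs) if p > 0.01]
print(peaks)   # [0, 16, 32, 48]: spacing 16 = 64 / r, so the period r is 4
```

A real run returns one peak per shot; when the period does not divide 2^n, the peaks only approximate multiples of 2^n/r, and a continued-fraction step recovers r.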

4) Exploring huge configuration spaces (combinatorics without pruning)

Principle: Superposition encodes all configurations (routes, schedules, bitstrings) simultaneously; a short cost oracle tags each configuration (phase kickback). You’ve “scored” everything at once.

Opportunity: Wherever classical solvers must prune (risking missing the best), superposition lets you score the full space and then steer amplitudes toward better regions, with the potential to improve solution quality at large scale.

How it works (simple):

Superpose all configurations.

Compute cost for each (in parallel) and write it into phase.

Use brief interference steps to bias probability toward lower-cost states, then sample candidates that are globally competitive.
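The score-everything-then-tilt recipe is what the Quantum Approximate Optimization Algorithm (QAOA) does at its shallowest depth. This sketch is one swapped-in concrete instance, not the only way to realize the pattern. It simulates one round on a hypothetical 4-node ring for Max-Cut; maximizing the cut is the same as minimizing its negative as a cost. Every cut is written into a phase, one mixer layer interferes them, and a classical grid search tunes the two angles. It makes no claim of advantage at this size.

```python
import cmath, math

EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]    # hypothetical 4-node ring; best cut is 4
N_QUBITS = 4

def cut_value(z):
    """Number of edges whose endpoints fall on different sides in bitstring z."""
    return sum(((z >> i) & 1) != ((z >> j) & 1) for i, j in EDGES)

def mixer(state, beta):
    """Apply exp(-i*beta*X) to every qubit: the short interference ('tilt') step."""
    c, s = math.cos(beta), -1j * math.sin(beta)
    for q in range(N_QUBITS):
        bit, new = 1 << q, state[:]
        for z in range(len(state)):
            if not z & bit:
                a0, a1 = state[z], state[z | bit]
                new[z], new[z | bit] = c * a0 + s * a1, s * a0 + c * a1
        state = new
    return state

def expected_cut(gamma, beta):
    dim = 2 ** N_QUBITS
    state = [1 / math.sqrt(dim)] * dim                               # superpose all cuts
    state = [a * cmath.exp(-1j * gamma * cut_value(z))               # score -> phase
             for z, a in enumerate(state)]
    state = mixer(state, beta)                                       # interfere
    return sum(abs(a) ** 2 * cut_value(z) for z, a in enumerate(state))

grid = [k * math.pi / 40 for k in range(40)]
best = max((expected_cut(g, b), g, b) for g in grid for b in grid)
print(round(expected_cut(0, 0), 3), round(best[0], 3))   # 2.0 3.0
```

The uniform blend averages a cut of 2; one tuned round raises the expected cut to about 3, against a best possible value of 4.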

5) Big linear-algebra moves (operate on entire vectors at once)

Principle: A vector lives as amplitudes; a compact routine can transform every component simultaneously, and you read just the metrics you need.

Opportunity: Solves, filters, compressions, control: tasks where classical methods slog through coordinates.

How it works (simple):

Encode the vector in a state.

Apply a short spectral routine that acts on the whole space at once.

Measure a small set of overlaps instead of printing the full result.

The nature of the opportunity (pulled together)

Coverage: Superposition lets you touch everything (all items, all scenarios, all inputs, all configurations) in one logical state.

Compactness: Instead of materializing a giant table, you carry it implicitly in amplitudes.

One short readout: Because you only need a global property, not the whole table, a brief interference/estimation routine suffices to extract it—this is where the step-count advantage appears.

Quality over heuristics: Businesses can reduce the reliance on pruning/greedy heuristics (which risk missing value) and move toward full-space reasoning with predictable convergence properties.

Ultra-simple technical picture (mental model)

Prepare: Build a uniform superposition—think “ghost copies” of every candidate layered on top of each other.

Tag: Run a tiny subroutine that, for each ghost copy, flips a sign or rotates a phase depending on whether it’s good or how good it is. Because the ghosts are all present, you tag them all at once.

Tilt: Apply a couple of cheap “tilt” steps (interference). These push probability mass toward the ghosts you care about.

Peek: Measure once; what you see reflects the global story you engineered (a winner, an average, a period, a low-cost region).

Everything special here starts with step (1). Without superposition, you’re back to touching candidates one-by-one. With it, your compute looks less like “loop over items” and more like “shape a field so the answer falls out.”

Principle 2 — Interference as Computation (using “phase” to boost the right answers and cancel the wrong ones)

Definition (what it is)

Quantum interference is the trick of steering the outcomes of a quantum process by making different “paths” add up or cancel out. Each possible path your computation could take carries a tiny “arrow” attached to it (think of a compass needle). When arrows point the same way, they reinforce and the outcome becomes likely. When arrows point in opposite directions, they wipe each other out and the outcome becomes unlikely. Designing a quantum algorithm is, in large part, arranging those arrows so only what you want survives.

Business gist (why this matters)

Interference is how quantum machines turn enormous parallel exploration into a single, useful answer. Instead of checking options one by one and tallying scores, a quantum routine explores many options at once, then uses interference to silence the junk and amplify the good. In business terms, that means:

Fewer steps to find a valid choice in a giant search space.

Cleaner signals when estimating crucial numbers (risk, averages, correlations).

Access to structure that’s invisible to classical methods without heroic effort (hidden periodicity, global patterns).

If superposition is “seeing many possibilities at once,” interference is deciding which of those possibilities actually show up on your screen.

Scientific explanation (plain, but precise)

Every path has a direction: A quantum state isn’t just “how much” of each possibility you have; it also carries a direction (phase). Two equally big contributions can reinforce or erase each other depending on their directions.

Gates are steering wheels: Quantum gates rotate those directions in a controlled way. By placing the right gates in the right order, you make helpful contributions line up and unhelpful ones point opposite.

Global cancellation is the magic: Classical computing can average numbers; it can’t make all wrong paths cancel at once without explicitly enumerating them. Quantum interference does that cancellation natively.

You extract a property, not the whole book: Because measurement gives you one outcome, algorithms are built to ensure that, after interference, the property you care about dominates the measurement (for example, “there is a match,” or “the period is K,” or “the mean is this angle”).

Fragile but powerful: Interference requires coherence (those arrow directions must stay well-defined). That’s why error rates and circuit depth matter: lose coherence, lose interference.

One deep, concrete example (in everyday language)

Problem: You have a colossal, unsorted list. One or more entries satisfy a certain rule. You want any one of them, fast.

Classical mindset: Check entries until you get lucky. In the worst case, you check nearly the whole list.

Interference-based quantum mindset:

Spread out: Put your machine into a balanced “all options at once” state so every index is present as a faint possibility.

Mark the hits: Run a tiny test that flips the direction of every “good” option but leaves all others alone. You did this for all candidates at once because you’re in superposition.

Reflect and reinforce: Apply a simple two-step routine that acts like a hall of mirrors: good options’ arrows line up more and more, while bad options’ arrows oppose each other more and more.

Peek: After repeating that mirror step roughly (π/4)√N times, where N is the list size and there is a single good option, a quick look almost surely lands on a good option.

Why this beats classical:

Classically, you either check items one by one or gamble with heuristics. Here, every item felt the test simultaneously and interference rebalanced the whole crowd, making hits loud and misses quiet. That’s fewer total test calls by orders of magnitude on very large lists.
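For the smallest case, four entries with the last one matching, one mark-and-reflect round already finds the match with certainty. Writing each arrow as a signed amplitude, the “mirror” step replaces every amplitude $a_i$ by $2\bar a - a_i$, a reflection about the mean $\bar a$:

$$
\left(\tfrac12,\tfrac12,\tfrac12,\tfrac12\right)
\xrightarrow{\ \text{mark}\ }
\left(\tfrac12,\tfrac12,\tfrac12,-\tfrac12\right)
\xrightarrow[\ \bar a = \frac14\ ]{\ a_i \,\mapsto\, 2\bar a - a_i\ }
(0,0,0,1)
$$

Measuring now returns the matching entry with probability 1. For larger lists each round rotates the state only a little, which is why about $\tfrac{\pi}{4}\sqrt{N}$ rounds are needed.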

Five opportunity patterns powered by interference

(For each: the principle used → the opportunity → the simple “how it works.” No equations.)

1) Hidden periodicity discovery (phase estimation “finds the beat”)

Principle used: Interference converts a faint, repeated pattern across many inputs into a single sharp spike you can read.

Nature of the opportunity: Whenever a hard problem secretly reduces to “there is a repeating cycle,” quantum interference can surface that cycle in polynomial time where classical would slog.

How it works (simple):

Prepare many inputs at once.

Let each input “ring” a little differently so the hidden beat shows up as consistent arrow rotations.

A short readout aligns those rotations into a clear pointer to the period.

Why better: Classical must compare many inputs explicitly; quantum packs those comparisons into one interference picture.

2) Unstructured search with fewer checks (amplitude amplification)

Principle used: Interference boosts the likelihood of good answers and dampens the rest by repeated, gentle reflections.

Nature of the opportunity: If you can recognize a correct answer when you see it, you can find one in about the square root of the usual effort.

How it works (simple):

Mark good items by flipping their arrow.

Apply a two-mirror routine that points all good arrows together and makes bad ones oppose.

After a modest number of repeats, a good one pops out.

Why better: Classical can’t make all the bad choices mutually cancel; interference can.

3) Fast, precise averages (amplitude estimation)

Principle used: Interference turns the problem “what fraction of paths are good?” into “what angle are these arrows rotated by?” which you can read with quadratically fewer tries.

Nature of the opportunity: Any heavy Monte Carlo task (risk, pricing, reliability, analytics) can reach the same error bars with far fewer runs.

How it works (simple):

Build a state that encodes all scenarios at once.

Give each scenario a tiny “nudge” based on its outcome.

Use an interference-based dial to read the overall nudge value directly.

Why better: Classical averages need lots of independent samples; interference reuses coherence to squeeze out more information per run.

4) Quantum walks on graphs (steering flows with interference)

Principle used: On a network, quantum “waves” spread ballistically; carefully placed phase shifts make the wave avoid dead ends and home in on targets faster than random wandering.

Nature of the opportunity: Large graph problems (navigation, matching, certain searches) can gain speedups, often quadratic, in how quickly you reach or mix to interesting regions.

How it works (simple):

Treat each edge as a path where a tiny wave can travel.

Add phase tweaks at nodes so bad detours cancel themselves out.

Let the wave evolve; probability collects where you want to be.

Why better: Random walks spread slowly and forget direction; interference codes direction into phases and preserves it.
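The difference between ballistic spreading and random wandering shows up even on the simplest graph, a line. This is a minimal simulation of a standard discrete-time coined quantum walk with a Hadamard coin and a symmetric starting coin state. The function names and the 100-step horizon are illustrative, and a line is far simpler than the large graphs described above.

```python
import math

def hadamard_walk(steps: int) -> dict[int, float]:
    """Discrete-time coined quantum walk on a line; returns position probabilities."""
    s = 1 / math.sqrt(2)
    # amplitudes[(position, coin)]; symmetric start (|0> + i|1>)/sqrt(2) at the origin
    amps = {(0, 0): s, (0, 1): 1j * s}
    for _ in range(steps):
        new: dict[tuple[int, int], complex] = {}
        for (x, c), a in amps.items():
            # Hadamard coin: |0> -> (|0>+|1>)/sqrt2, |1> -> (|0>-|1>)/sqrt2
            for c2, h in ((0, s), (1, s if c == 0 else -s)):
                x2 = x - 1 if c2 == 0 else x + 1  # coin 0 steps left, coin 1 steps right
                new[(x2, c2)] = new.get((x2, c2), 0) + h * a
        amps = new
    probs: dict[int, float] = {}
    for (x, _), a in amps.items():
        probs[x] = probs.get(x, 0.0) + abs(a) ** 2
    return probs

def spread(probs: dict[int, float]) -> float:
    """Standard deviation of the position distribution."""
    mean = sum(x * p for x, p in probs.items())
    return math.sqrt(sum((x - mean) ** 2 * p for x, p in probs.items()))

t = 100
print(round(spread(hadamard_walk(t)), 1), math.sqrt(t))  # quantum spread vs classical sqrt(t)
```

The quantum walk's spread grows in proportion to the number of steps (roughly 0.54 per step for this coin), while an unbiased classical random walk spreads only as the square root of the number of steps.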

5) Spectrum and feature extraction (turning structure into a readable peak)

Principle used: Interference can make “being aligned with an important direction” show up as a tall peak while other directions melt into noise.

Nature of the opportunity: Pull out global features (dominant frequencies, key components, stable modes) without scanning every possibility in detail.

How it works (simple):

Prepare a state that blends many candidate directions.

Let a compact circuit imprint how well each direction matches the data into phases.

A short interference routine makes good matches stand tall; measure where the peak is.

Why better: Classical routines often need long iterative refinements; interference can surface the winner in a handful of coherent steps.

The nature of the opportunity (pulled together)

Interference is selection, not brute force. It lets you shape a sea of possibilities so that only the right islands remain visible.

It’s global. You don’t cherry-pick or prune; you re-weight the entire space at once.

It’s compact. A few well-chosen reflections and rotations can replace mountains of classical trial-and-error.

It’s principled. These are not ad-hoc heuristics; the cancellation and reinforcement are engineered outcomes of the circuit, with provable advantages in several problem families.

Ultra-simple technical picture (mental model)

Picture millions of faint radio stations playing at once.

You can’t listen to each station separately.

Instead, you twist a knob that shifts phases so that only the stations matching your song line up and get loud, while all others fall out of sync and go quiet.

That knob-twisting is interference engineering.

The final “song” you hear after a few twists is the answer you wanted.

Principle 3 — Entanglement (non-classical correlation that carries global constraints “for free”)

Definition (what it is)

Entanglement is a uniquely quantum linkage between qubits. When qubits are entangled, the whole system has a single joint description; the parts no longer have independent states of their own. Measure one part and the outcome is correlated with what you will find in the rest, no matter how far apart they are, although no usable signal travels between them. In computing terms, entanglement is how a quantum machine stores and manipulates global relationships across many bits of information at once.

Business gist (why this matters)

Most hard problems aren’t hard because of raw arithmetic—they’re hard because everything depends on everything else. Classical software has to keep those global interdependencies in sync with elaborate data structures, many passes, and lots of memory. A quantum computer can bake global consistency into the state itself via entanglement, then transform that state in a few coherent steps. The payoff:

Fewer passes and hacks to keep constraints consistent.

Better solutions when local tweaks can’t “see” global effects.

The ability to represent and process correlations that classical models approximate poorly (or not at all).

Think of entanglement as shared context that never gets out of date while you compute.

Scientific explanation (plain but precise)

More than “shared randomness”: Classical correlation can be explained by common causes or shared keys. Entanglement goes beyond that—no classical story can reproduce all of its statistics.

One object, many parts: An entangled register is one indivisible information object spread over many qubits. You operate on the whole without losing track of how parts relate.

Global constraints live in the fabric: Parity relations, symmetries, and “these two must always match” constraints can be embedded directly in the state. You don’t re-enforce them later—they’re always true while you compute.

Power through coordination: Gates act on a few qubits at a time, but because the state is entangled, each local operation reshapes the joint state, and its effect shows up in the correlations across the whole register.

Essential for quantum advantage: Superposition gives you coverage; entanglement makes that coverage meaningful, letting the device carry global structure while you steer it with interference.
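The claim that no classical story reproduces entanglement's statistics has a standard quantitative form, the CHSH inequality. Any model built on shared randomness keeps a certain combination S of four correlations within ±2. This sketch computes S for the Bell state (|00⟩ + |11⟩)/√2 using the usual textbook measurement angles. It uses plain lists and real amplitudes, which is enough for this particular state and for measurements in the X–Z plane. The helper names are illustrative.

```python
import math

def obs(theta: float) -> list[list[float]]:
    # Spin measurement along angle theta in the X-Z plane: cos(theta) Z + sin(theta) X
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]

def kron(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    # Two-qubit observable A (first qubit) tensor B (second qubit); index = 2*bit1 + bit2
    return [[a[i // 2][j // 2] * b[i % 2][j % 2] for j in range(4)] for i in range(4)]

def correlation(state: list[float], a_angle: float, b_angle: float) -> float:
    m = kron(obs(a_angle), obs(b_angle))
    return sum(state[i] * m[i][j] * state[j] for i in range(4) for j in range(4))

bell = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]  # (|00> + |11>)/sqrt(2)
a0, a1, b0, b1 = 0.0, math.pi / 2, math.pi / 4, -math.pi / 4
chsh = (correlation(bell, a0, b0) + correlation(bell, a0, b1)
        + correlation(bell, a1, b0) - correlation(bell, a1, b1))
print(chsh)  # classical shared-randomness models satisfy |S| <= 2
```

The result is 2√2 ≈ 2.83, above the classical limit of 2. That gap is the “goes beyond shared randomness” property in numbers.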

One deep, concrete example (in simple language)

Problem: You need a plan that satisfies many rules at once. Some rules are local (A before B), others are global (total capacity across the whole network). Classical solvers juggle lots of bookkeeping to keep these rules consistent while they search.

Entanglement-based mindset:

Start with shared structure: Prepare a set of qubits whose joint state is already wired with the core consistency rules (for example, “these two must agree,” “this set must have even parity,” “the sum across these qubits is fixed”). That wiring is entanglement.

Propose changes locally: Apply small gate sequences that adjust parts of the plan (switch a route, move a time slot).

Let the state carry the global truth: If the local moves are chosen to respect the constraints (for example, moves that swap values between qubits instead of setting them, so totals stay fixed), every tweak keeps the entangled state inside the set of valid plans. You don’t chase the ripple effects with extra loops; the ripple is already built in.

Nudge toward good answers: Use brief interference steps that reward rule-satisfying patterns and dampen violators. When you look, valid plans have a much higher chance to appear.

Why this beats classical in spirit: You aren’t simulating global consistency with layers of checks; the consistency is the medium. That’s what cuts passes, memory traffic, and brittle heuristics.

Five opportunity patterns powered by entanglement

(For each: the principle → the nature of the opportunity → a super-simple “how it works.”)

1) Global-constraint encoding (keep the whole system consistent while you compute)

Principle used: Encode rules—parities, “must-match,” “must-differ,” totals—directly into an entangled state so they hold automatically.

Nature of the opportunity: Hard scheduling, routing, layout, and assignment problems often fail because local moves break far-away constraints. Entanglement lets you explore options without falling out of global consistency every step.

How it works (simple):

Build an initial entangled state that represents only constraint-respecting patterns (or heavily favors them).

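Apply small local updates chosen to respect the rules (for example, value-swapping moves that keep totals fixed); the entangled state then never leaves the globally consistent set.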

Use interference to tilt probabilities toward lower cost; sample valid, globally consistent candidates.
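Whether local updates keep a global rule intact depends on which updates you choose. For a “fixed total” rule, one common choice is a gate that can only move a 1 between two qubits, never create or destroy one. This sketch assumes a hypothetical four-slot plan with exactly two slots booked. It applies the exp(−iθ(XX+YY)/2) “XY” or partial-swap mixer used in some constraint-preserving optimization ansätze. The qubit count, gate order and angles are arbitrary illustrations.

```python
import math

N_QUBITS = 4

def weight(index: int) -> int:
    """Number of 1s in a basis pattern (here: how many slots are booked)."""
    return bin(index).count("1")

def xy_mixer(state: list[complex], i: int, j: int, theta: float) -> list[complex]:
    """Apply exp(-i*theta*(XX+YY)/2) to qubits i and j: it only moves a '1' between them."""
    out = state[:]
    c, s = math.cos(theta), -1j * math.sin(theta)
    for idx in range(len(state)):
        bi, bj = (idx >> i) & 1, (idx >> j) & 1
        if bi == 0 and bj == 1:  # visit each |..0..1..> / |..1..0..> pair exactly once
            partner = idx ^ (1 << i) ^ (1 << j)
            a, b = state[idx], state[partner]
            out[idx] = c * a + s * b
            out[partner] = s * a + c * b
    return out

# Start from a valid plan: exactly two of the four qubits are 1.
state = [0j] * 2**N_QUBITS
state[0b0011] = 1.0
for step, (i, j) in enumerate([(0, 1), (1, 2), (2, 3), (0, 3), (1, 3)]):
    state = xy_mixer(state, i, j, theta=0.3 + 0.2 * step)  # arbitrary illustrative angles

support = [idx for idx, a in enumerate(state) if abs(a) > 1e-12]
print(sorted({weight(idx) for idx in support}))  # every surviving pattern still has weight 2
```

After five entangling moves the state is spread over several patterns, yet every one of them still has exactly two 1s. A single-qubit flip, by contrast, would not have this property.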

2) Fault-tolerant logical qubits (make long, exact computations possible)

Principle used: Entanglement creates redundancy with structure (stabilizer codes), so you can detect and correct errors without learning or disturbing the underlying logical information.

Nature of the opportunity: All the big, provable speedups need long circuits. Entanglement is the raw material for error correction, which turns noisy physical qubits into reliable logical ones.

How it works (simple):

Spread one “logical” bit across many physical qubits with a pattern of entanglement.

Repeatedly ask gentle “parity questions” (stabilizer measurements) that reveal whether and where noise struck, but not the data itself.

Fix any slips and keep computing. The data never leaves the entangled fortress.
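The “parity questions” can be shown with the smallest textbook code, the three-qubit repetition code, which protects against a single bit flip. Real machines use larger codes, such as the surface code, that also catch phase flips. This sketch stores the encoded state as a dictionary from basis patterns to amplitudes. The amplitudes √0.3 and √0.7 and the helper names are illustrative.

```python
import math

def apply_x(state: dict[int, complex], q: int) -> dict[int, complex]:
    # Pauli X on qubit q flips bit q of every basis pattern.
    return {idx ^ (1 << q): a for idx, a in state.items()}

def parity(state: dict[int, complex], q1: int, q2: int) -> int:
    """Measure Z_q1 Z_q2. For these code states the outcome is deterministic."""
    outcomes = {((idx >> q1) ^ (idx >> q2)) & 1 for idx in state}
    assert len(outcomes) == 1, "syndrome should not depend on the encoded data"
    return outcomes.pop()

# Logical state a|0_L> + b|1_L> with |0_L> = |000>, |1_L> = |111>.
a, b = math.sqrt(0.3), math.sqrt(0.7)
encoded = {0b000: a, 0b111: b}

# Syndrome table: which qubit to flip back for each (Z0Z1, Z1Z2) outcome.
FIX = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

for flipped in (None, 0, 1, 2):
    noisy = encoded if flipped is None else apply_x(encoded, flipped)
    syndrome = (parity(noisy, 0, 1), parity(noisy, 1, 2))
    fix = FIX[syndrome]
    recovered = noisy if fix is None else apply_x(noisy, fix)
    print(flipped, syndrome, recovered == encoded)
```

Each syndrome is identical on both halves of the superposition, so reading it reveals which qubit slipped without revealing anything about a or b.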

3) Modular and distributed quantum computing (stitch small chips into one big machine)

Principle used: Use entanglement links (created by photons or couplers) so distant processors share quantum state; teleport quantum information across those links without moving the physical qubits.

Nature of the opportunity: Instead of one fragile mega-chip, build many modest chips and entangle them on demand—scale out like cloud clusters, but at the quantum level.

How it works (simple):

Create an entangled pair bridging two modules.

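Perform a joint measurement on the sending side, send the two resulting ordinary bits across, and apply a matching correction on the receiving side. The qubit’s state now lives on the other module (teleportation).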

Keep entangling-and-teleporting to run one computation across many modules as if they were one device.
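Teleportation is small enough to check by brute force. This sketch simulates three qubits, the state to send plus a shared Bell pair, with a plain list of eight amplitudes. It walks through all four measurement outcomes on the sending side, applies the matching correction on the receiving side, and confirms that the receiver ends up with the original state every time. The helper names and test amplitudes are illustrative, and the simulation is classical.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]  # Hadamard gate

def apply_1q(state: list[complex], q: int, m: list[list[float]]) -> list[complex]:
    """Apply a 2x2 gate m to qubit q (bit q of the basis index)."""
    out = [0j] * len(state)
    for idx, a in enumerate(state):
        bit = (idx >> q) & 1
        for new_bit in (0, 1):
            out[(idx & ~(1 << q)) | (new_bit << q)] += m[new_bit][bit] * a
    return out

def apply_cnot(state: list[complex], control: int, target: int) -> list[complex]:
    return [state[idx ^ (1 << target)] if (idx >> control) & 1 else state[idx]
            for idx in range(len(state))]

def teleport(alpha: complex, beta: complex) -> dict[tuple[int, int], list[complex]]:
    # Qubit 0 holds alpha|0> + beta|1>; qubits 1 (sender) and 2 (receiver) share (|00>+|11>)/sqrt2.
    state = [0j] * 8
    for q0, amp in ((0, alpha), (1, beta)):
        state[q0] += amp * S          # qubits 1,2 = 00
        state[q0 | 0b110] += amp * S  # qubits 1,2 = 11
    state = apply_cnot(state, control=0, target=1)
    state = apply_1q(state, 0, H)
    results = {}
    for m0 in (0, 1):
        for m1 in (0, 1):
            # Project onto the sender's outcome (m0, m1) and keep the receiver's amplitudes.
            bob = [state[m0 | (m1 << 1) | (b << 2)] for b in (0, 1)]
            norm = math.sqrt(sum(abs(x) ** 2 for x in bob))
            bob = [x / norm for x in bob]
            # Two classical bits travel to the receiver, who applies X^m1 and then Z^m0.
            if m1:
                bob = [bob[1], bob[0]]
            if m0:
                bob = [bob[0], -bob[1]]
            results[(m0, m1)] = bob
    return results

for outcome, bob in teleport(0.6, 0.8j).items():
    print(outcome, bob)
```

The receiving side cannot fix its qubit until the two measurement bits arrive over an ordinary channel, which is why teleportation does not carry information faster than light.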

4) Strongly correlated simulations (represent what classical methods cannot)

Principle used: Many materials and molecules have long-range, many-body correlations that explode classical memory. Entanglement naturally captures those patterns.

Nature of the opportunity: Where classical approximations crumble (high correlation, multi-reference chemistry, exotic phases), an entangled register is the native model. You simulate by evolving the entangled state directly.

How it works (simple):

Prepare an entangled state that mirrors the system’s structure.

Let it evolve under gate sequences that emulate the system’s interactions.

Read compact global properties (energies, response) without unpacking the entire wavefunction.

5) Measurement-based quantum computing (compute by consuming an entangled resource)

Principle used: First, build a large, highly entangled “resource” state. Then, perform a sequence of simple, local measurements. The pattern of entanglement does the heavy lifting; measurements drive the algorithm forward.

Nature of the opportunity: Separates “create a great entangled fabric” from “run many programs on it.” Useful for photonic platforms and modular architectures where making entanglement is easy and measurements are cheap.

How it works (simple):

Weave a big grid of entangled qubits (a “cluster state”).

Decide the computation by the order and angles of local measurements.

As you measure, you “consume” the grid and the result pops out at the end.

The nature of the opportunity (pulled together)

Entanglement is shared context made physical. You don’t simulate relationships—you are the relationships while you compute.

It eliminates bookkeeping overhead. Global constraints ride along automatically, so fewer loops, fewer cache misses, and fewer brittle fixes.

It unlocks scale. With error-corrected entangled codes and entangled chip-to-chip links, you get long circuits and big machines—the precondition for headline quantum speedups.

It models what’s classically painful. Strong correlations are natural on a quantum device and a memory disaster on a classical one.

Ultra-simple mental model

Imagine a team that never has to meet because they share a live, perfectly synchronized whiteboard in their heads. Whenever one person edits a detail, everyone’s view updates instantly and no rules are violated. That’s what entanglement gives your computation: a shared, always-correct global context that travels with every move you make.

Principle 4 — Amplitude Amplification (turning a tiny success chance into a big one, fast)

Definition (what it is)

Amplitude amplification is a general quantum trick for finding “good” items in a sea of possibilities with far fewer checks than any classical method that doesn’t exploit extra structure. You start with a balanced “all-options-at-once” state. You have a recognizer (an oracle) that can tell you whether a given option is good. By alternating two very simple moves—mark the good ones and reflect the whole crowd around its average—you steadily pump up the visibility of good options and dampen everything else. After repeating this small routine the right number of times, measuring the system almost surely yields a good option.

In short: if classical search needs a number of checks that grows with the size of the space, amplitude amplification needs only a number of checks that grows with the square root of that size.

Business gist (why this matters)

In many workflows the slow step is “call the expensive evaluator”: the script that scores a candidate route, runs a simulation, tests a design, or checks a rule. Amplitude amplification cuts the number of evaluator calls by a square-root factor when all you need is “find something that passes.” Where those calls dominate the cost, that can turn:

Overnight batch searches into near-real-time discovery,

Massive trial-and-error loops into short, predictable runs,

Risky heuristic pruning into exhaustive coverage with fewer steps.

Anywhere you have a yes/no test for “acceptable” (feasible schedule, safe configuration, passing test case, profitable threshold), this principle can act like a drop-in accelerator, provided the test can be compiled into a reversible quantum circuit.

Scientific explanation (plain but precise)

A recognizer you can run on all options at once. Because the input is a superposition, one call to the recognizer touches every candidate simultaneously, flipping a tiny “marker” on the good ones.

Two reflections do the heavy lifting. The marking step is itself the first reflection: it flips the sign of every good item. The second reflects the whole population around its average. Together, “mark then reflect” tilts probability toward good items.

Repeat a small number of times. Each round boosts the chance of seeing a good item. Stop at the sweet spot and measure—you almost surely get one.

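Optimal in the black-box world. If you truly have no structure beyond a recognizer, no classical algorithm can avoid checking a number of candidates proportional to the size of the space. Amplitude amplification needs only about the square root of that number, and it is provably optimal: no quantum algorithm can do better in this setting.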

Works with superposition, powered by interference. Superposition gives you coverage; interference from the two reflections gives you controlled amplification.

One deep, concrete example (in everyday language)

Problem: You maintain a huge catalog with millions of entries. A new regulation defines a complex rule for compliance. You must find any entry that violates the rule, quickly.

Classical mindset: Check entries one by one with your compliance script. Even with parallel workers you end up calling that script a huge number of times.

Quantum with amplitude amplification:

Spread out: Put your machine into a uniform “all entries present” state.

Mark the violators: Run your compliance test once on this state. Because every entry is present, the machine marks all violators at once (internally it flips a tiny flag on those entries).

Amplify: Do a simple two-step “mirror” routine that turns those tiny flags into a big tilt toward violators. Repeat it roughly the square root of (catalog size ÷ number of violators) times.

Peek: Measure. The odds now strongly favor landing on a violating entry.

Why this beats classical:

Classically, the cost is “how many times you run the test.” Quantum amplitude amplification reduces that count from the size of the catalog to roughly the square root of it. For a hundred million entries and a single violator, you’re down to fewer than ten thousand recognizer calls (about 7,850 with the usual π/4 factor) instead of up to a hundred million. That is four orders of magnitude fewer, and every entry was still touched coherently.
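The catalog arithmetic can be checked with the textbook formulas for amplitude amplification. With N items of which M are good, the starting angle is θ = arcsin(√(M/N)), about π/(4θ) rounds are needed, and the success probability after k rounds is sin²((2k+1)θ). This sketch assumes a single violator among a hundred million entries; the function name is illustrative.

```python
import math

def grover_plan(n_items: int, n_good: int = 1) -> tuple[int, float]:
    """Near-optimal round count and resulting success probability (textbook formulas)."""
    theta = math.asin(math.sqrt(n_good / n_items))  # initial angle toward the good items
    k = math.floor(math.pi / (4 * theta))            # rounds before the rotation overshoots
    p_success = math.sin((2 * k + 1) * theta) ** 2
    return k, p_success

k, p = grover_plan(100_000_000)
print(k, round(p, 6))
```

That gives 7,853 rounds, each with one recognizer call, and a success probability extremely close to 1.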

Five opportunity patterns powered by amplitude amplification

(Each one: the principle → the nature of the opportunity → a simple “how it works.” No equations.)

1) Unstructured search (“find any needle”)

Principle used: Mark-and-reflect to amplify rare “needle” items in a giant haystack.

Nature of the opportunity: Whenever you have a yes/no test and just need one hit—first feasible plan, first fraud match, first valid configuration—you can get it in square-root checks instead of linear.

How it works (simple):

Prepare all candidates at once.

The recognizer flips a flag on every good candidate simultaneously.

Roughly the square root of (candidates ÷ good candidates) amplify steps make good candidates dominate; measure to retrieve one.

2) Function inversion (“find an input that gives this output”)

Principle used: Treat “does this input map to the target output?” as your recognizer; amplify the set of matching inputs.

Nature of the opportunity: Reverse-engineering inputs from outputs comes up in testing, migration, and compatibility checks when you lack an index or a shortcut.

How it works (simple):

Superpose all possible inputs.

The recognizer compares the function output with the target and flags matches.

Amplify and measure; you obtain a valid input with far fewer function calls than classical trial-and-error.

3) Constraint satisfaction at scale (“find any assignment that passes all rules”)

Principle used: Use a recognizer that returns “pass” only if all constraints hold; amplify the tiny fraction of assignments that pass.

Nature of the opportunity: Scheduling with hard constraints, configuration with safety rules, layout with tight capacities—when feasible solutions are rare.

How it works (simple):

Superpose all candidate assignments.

The recognizer checks constraints coherently and flags passes.

Amplify to surface a passing assignment without combing through the entire space.

4) Rare-event discovery in simulation (“find a scenario that breaks things”)

Principle used: Recognizer fires if a simulated outcome exceeds a threshold (crash, loss, overload). Amplify those rare scenarios.

Nature of the opportunity: Stress testing, safety validation, fuzzing. If dangerous cases are extremely rare, classical Monte Carlo wastes runs on boring scenarios.

How it works (simple):

Prepare all random scenarios in superposition.

Run a short simulation step and flag any scenario that triggers the rare event.

Amplify to produce a failing scenario quickly, revealing where the system breaks.

5) Similarity or pattern match over unindexed data (“find any close match”)

Principle used: Recognizer checks “is similarity above threshold?”; amplification highlights near-duplicates or close neighbors without building an index.

Nature of the opportunity: Data cleansing, dedup, entity resolution, quick first-hit retrieval in massive pools where indexing is unavailable or too costly.

How it works (simple):

Superpose all records.

The recognizer computes a quick similarity test to a query and flags those above threshold.

Amplify to output any close match in far fewer similarity checks than a classical sweep.

The nature of the opportunity (pulled together)

You pay for evaluator calls, not for candidates. Amplitude amplification ties effort to the square root of the population size rather than to the size itself.

You don’t prune; you cover. Every candidate is evaluated coherently at least a little, so you avoid “prune-and-pray” and still stop early.

It’s a drop-in trick. If you can implement your yes/no test as a clean subroutine, you can usually wrap it in amplify steps without redesigning the domain logic.

Ultra-simple mental model

Imagine a stadium full of whispering people, only a handful saying “yes.” You clap a simple rhythm; only the “yes” whisperers flip their whisper when they hear the clap; then everyone’s volume is mirrored around the stadium’s average. Repeat a few times. The “yes” crowd becomes a chant; the “no” crowd fades into hush. When you finally listen, you hear a “yes” loud and clear, and you didn’t have to interview the whole stadium.

Principle 5 — Phase Estimation & the “Quantum Fourier Lens” (turn hidden structure into a readable spike)

Definition (what it is)

Phase estimation is a quantum routine that reads out a hidden “rhythm” embedded in a quantum process. You let a process run in carefully chosen “ticks,” each tick imprinting a tiny twist (a phase) on a small register of reference qubits. When you’ve collected enough twists, you run a short, fixed “unmixing” step (the inverse quantum Fourier transform) that concentrates all that faint rhythmic evidence into a sharp, readable pointer. In plain terms: it’s a structure detector. If your problem hides a regular cycle, a repeating pattern, or a stable frequency, phase estimation pulls it into focus quickly.

Business gist (why this matters)

A huge class of hard problems secretly boil down to “what’s the underlying cycle?” or “what are the key frequencies?” Classical software usually needs long scans, heavy arithmetic, or exhaustive comparisons to reveal that structure. Quantum phase estimation compresses that work: it samples the entire pattern in one coherent sweep and uses a tiny post-processing step to surface the answer. Benefits:

Super-polynomial leaps on some algebraic problems where the cycle is the whole game (the famous cryptography story lives here).

Fast spectral reads (the important “notes” of a system) that classical methods approximate slowly or expensively.

Reliable global signals without exhaustive enumeration.

If superposition lets you look everywhere at once, and interference lets you silence the noise, phase estimation is how you read the deep pattern hiding underneath.

Scientific explanation (plain but precise)

Hidden cycles leave fingerprints. Many computations, when repeated, cycle. That cycle is encoded as a consistent twist (phase) you can accumulate.

Tick, tick, tick — then unmix. You run the underlying process for different durations (like listening at different shutter speeds). Each duration adds a controlled twist to a reference. The final “unmix” step refocuses those twists into a clean, human-readable answer.

Why quantum helps:

You probe all inputs at once (thanks to superposition), so the cycle’s fingerprint is gathered globally, not one input at a time.

Interference during the unmixing step stacks all consistent hints and cancels contradictions, making a crisp pointer.

Not just numbers, but eigen-stuff. The “rhythm” can be the intrinsic tone (eigenvalue) of a transformation: the stable factor a system multiplies a special direction by. Phase estimation reads those tones directly.

Small circuit, big payoff. The probe is short and general-purpose; the heavy lifting is done by physics (parallel evolution of many cases) rather than long classical loops.

One deep, concrete example (in everyday language)

Problem: You’re told a black-box rule transforms numbers in a complicated way, but repeats after some unknown step count. Your job is to find that step count. Classical code would test and compare many steps and inputs.

Phase-estimation mindset:

Listen to everything at once: Prepare a gentle blend of many inputs so every possible step in the cycle is “in the room.”

Collect the beat: Run the rule for different durations that double each time (short, medium, long…). Each run adds a little twist that depends exactly on the hidden period.

Unmix the echoes: Perform a short, fixed transformation that takes all those little twists and snaps them into a single peak that points to the period.

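Read the number: Measure the peak. It points to a fraction close to (some whole number) ÷ (the period); a quick classical step (continued fractions) pulls out the period, and an occasional rerun handles unlucky draws. The result is the cycle length with high confidence.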

Why this beats classical:

Classically, you’d either brute-force compare many transformed values or do heavy modular arithmetic per trial. The quantum routine packages all the comparisons into one coherent sweep and then amplifies the consistent answer. That turns a sprawling search into a compact readout.
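The “read the number” step can be simulated for a known case. This sketch assumes the rule “multiply by 2, mod 21,” whose period is 6, and a phase-estimation readout of the eigenphase 1/6 (the s = 1 case of s ÷ period) with 11 reference qubits. It computes the textbook outcome probabilities, takes the most likely reading, and recovers the period with Python's `Fraction.limit_denominator`, which performs the continued-fraction search. The function name and parameter values are illustrative.

```python
import math
from fractions import Fraction

def outcome_probability(phase: float, y: int, t: int) -> float:
    """Probability of reading y from t-bit phase estimation of an exact eigenphase `phase`."""
    size = 2**t
    delta = phase - y / size
    denom = math.sin(math.pi * delta)
    if abs(denom) < 1e-12:  # reading matches the phase exactly
        return 1.0
    return (math.sin(math.pi * size * delta) / (size * denom)) ** 2

a, n = 2, 21
t = 2 * math.ceil(math.log2(n)) + 1  # 11 reference qubits, a common textbook choice
phase = 1 / 6  # eigenphase s/r with s = 1; a real run would not know r = 6
probs = [outcome_probability(phase, y, t) for y in range(2**t)]
y_best = max(range(2**t), key=probs.__getitem__)
guess = Fraction(y_best, 2**t).limit_denominator(n)  # continued-fraction step
r = guess.denominator
print(y_best, guess, r, pow(a, r, n) == 1)
```

If the eigenphase had been 2/6, the fraction would reduce to 1/3 and give 3 instead of 6. The check `pow(a, r, n) == 1` catches such cases and prompts a rerun.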

Five opportunity patterns powered by phase estimation

(For each: the principle used → the nature of the opportunity → a simple “how it works.” No equations.)

1) Order-finding and cryptanalytic structure (the classic “break the lock” case)

Principle used: The transformation you’re given secretly repeats after a certain count (its “order”). Phase estimation detects that count fast.

Nature of the opportunity: Many public-key systems rely on the assumed hardness of discovering such hidden orders. When you can read the order quickly, the lock opens.

How it works (simple):

Prepare many possibilities together.

Run the transformation for carefully chosen durations to gather the beat.

Unmix to get a sharp peak; a short classical step turns it into the order.

Why better: Classical code needs many heavy steps; the quantum routine samples the entire rhythm at once.

2) Eigenvalue readout for quantum dynamics (the “what tones does this system sing?” case)

Principle used: Every stable mode of a system has a signature tone. Phase estimation hears that tone directly.

Nature of the opportunity: When your decisions depend on the precise “notes” of a complex system (energies, stability factors), direct tone-reading beats approximate guessing.

How it works (simple):

Prepare a state that overlaps with the system’s stable modes.

Let the system evolve for different durations, collecting its internal rhythm.

Unmix to surface the tones (eigenvalues) you care about.

Why better: Classical approximations become fragile and slow as systems get strongly correlated; the quantum ear stays precise.

3) Hidden subgroup and symmetry discovery (the “find the blueprint” case)

Principle used: Many hard problems hide a symmetry blueprint. That blueprint creates a repeating signature that phase estimation can expose.

Nature of the opportunity: When the core difficulty is “identify the symmetry that explains everything,” pulling that pattern out fast shortcuts the entire computation.

How it works (simple):

Interrogate the system in parallel so symmetry leaves a uniform fingerprint.

Gather a few twist readings.

Unmix to point at the symmetry parameters.

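Why better: Classical symmetry hunts chase countless cases; quantum unmixing collapses the search into one focused pointer. This is known to work efficiently for commutative (abelian) symmetries, which cover factoring and discrete logarithms; many non-commutative cases, such as graph isomorphism, remain open.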

4) Fast spectral primitives for linear algebra (the “read the spectrum, guide the solve” case)

Principle used: Linear systems and matrix problems are governed by spectra. Phase estimation gives quick access to those spectra.

Nature of the opportunity: If you can identify dominant tones quickly (largest components, stable directions), you can steer downstream routines more efficiently.

How it works (simple):

Encode your vector as a quantum state.

Couple it to a process that encodes the matrix action.

Use phase estimation to read the important tones and bias computation toward them.

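Why better: Classical solvers need many iterations to infer these tones; phase estimation front-loads that insight, provided the vector can be loaded efficiently and you need only summary properties of the result rather than every entry.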

5) Precision metering of tiny shifts (the “measure a hair-thin effect” case)

Principle used: Small changes in a process cause small changes in the collected twists. Phase estimation can resolve very tiny differences by stacking consistent evidence.

Nature of the opportunity: Any task where the prize is a very small shift in behavior benefits from coherent accumulation instead of averaging noisy samples.

How it works (simple):

Let the system imprint micro-twists for different durations.

Unmix to turn a barely perceptible drift into a clear pointer.

Why better: Classical averaging fights noise with sheer volume; phase estimation reuses coherence to get more information per probe.

The nature of the opportunity (pulled together)

From haystack to spotlight. Instead of sifting through data, you focus the structure itself until it stands out plainly.

Global in, crisp out. A short, general-purpose unmixing step turns a diffuse cloud of hints into a single, actionable number.

Leverages what’s already there. If your problem is secretly periodic or spectral, phase estimation taps that fact directly — no heroic workarounds.

Ultra-simple mental model

Imagine a room full of instruments, each playing softly and slightly out of sync. You dim the lights and ask them to play at carefully chosen tempos. Then you put on a special pair of headphones that line up all the echoes from the true beat and mute everything else. In a moment, one clear tempo clicks into place. That click is the answer phase estimation gives you.

Principle 6 — Hamiltonian Simulation (using a quantum computer to “play nature back” efficiently)

Definition (what it is)

A Hamiltonian is the rulebook that tells a quantum system how it naturally changes over time: what interacts with what, and how strongly. Hamiltonian simulation means programming a quantum computer so that, for a while, it follows the real system’s rulebook to a precision you choose. In effect, you let the computer replay nature faster, cleaner, or on demand.

Business gist (why this matters)

When real systems are big or strongly interacting (molecules, materials, devices), classical simulation explodes in cost. A quantum computer can natively track those entangled dynamics without that blow-up. This turns guesswork and expensive lab iteration into computational experiments you can rerun, pause, branch, and interrogate. Payoffs:

Fewer physical prototypes and assays; more “simulate before you synthesize.”

Access to regimes classical models approximate poorly (strong correlation, excited states, real-time dynamics).

Faster iteration loops for discovery and design (chemistry, materials, processes), because the heavy math is what the machine does best.

Scientific explanation (plain but precise)

Nature is quantum. The real system evolves by a compact set of local interaction rules (who talks to whom, with what strengths). Those rules generate the system’s full behavior—even when that behavior looks astronomically complex on a classical computer.

Quantum computers run the same kind of rules. We compile the real rulebook into gate sequences that create the same local pushes and pulls the real system would feel.

Short, local pushes stitched together. Rather than one gigantic step, the simulator applies many tiny, local nudges in the right order so that the overall effect closely matches the true evolution.

Modern toolkits keep errors in check. Techniques with unfriendly names (product formulas, “linear combination of unitaries,” qubitization, block-encoding) are just smarter ways to string nudges together with fewer mistakes per unit of simulated time.

You measure global properties, not the whole wave. After “playing nature back,” you query specific observables (energy gaps, reaction likelihoods, transport coefficients) instead of dumping impossible amounts of raw state data.
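The “many tiny, local nudges” idea is the product (Trotter) formula. This sketch assumes the simplest possible rulebook, a single qubit with H = X + Z. There the exact evolution has a closed form because (X + Z)² = 2I, and the sketch compares it with n alternating steps of e^(−iXt/n) and e^(−iZt/n). The 2×2 matrix helpers are written out by hand to stay dependency-free; their names are illustrative.

```python
import cmath
import math

Matrix = list[list[complex]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def exp_x(theta: float) -> Matrix:  # e^{-i theta X}
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -1j * s], [-1j * s, c]]

def exp_z(theta: float) -> Matrix:  # e^{-i theta Z}
    return [[cmath.exp(-1j * theta), 0], [0, cmath.exp(1j * theta)]]

def exact(t: float) -> Matrix:  # e^{-i t (X + Z)}, using (X + Z)^2 = 2I
    c = math.cos(math.sqrt(2) * t)
    s = math.sin(math.sqrt(2) * t) / math.sqrt(2)
    return [[c - 1j * s, -1j * s], [-1j * s, c + 1j * s]]

def trotter(t: float, steps: int) -> Matrix:
    """First-order product formula: alternate small X and Z nudges `steps` times."""
    step = matmul(exp_x(t / steps), exp_z(t / steps))
    u: Matrix = [[1, 0], [0, 1]]
    for _ in range(steps):
        u = matmul(step, u)
    return u

def distance(a: Matrix, b: Matrix) -> float:  # Frobenius norm of the difference
    return math.sqrt(sum(abs(a[i][j] - b[i][j]) ** 2 for i in range(2) for j in range(2)))

t = 1.0
for steps in (1, 10, 100, 1000):
    print(steps, distance(trotter(t, steps), exact(t)))
```

The error shrinks roughly tenfold for every tenfold increase in steps, the signature of a first-order product formula. Higher-order formulas and the other techniques named above reduce the error faster per unit of simulated time.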

One deep, concrete example (in everyday language)

Problem: You want to understand how a new battery electrolyte actually behaves when lithium ions move, cluster, or cross an interface. Classical methods either oversimplify or burn extraordinary compute budgets and still miss key correlated effects.

Hamiltonian simulation mindset:

Write the rulebook. Express the key interactions: ion–solvent attraction, repulsion between ions, coupling to an electrode surface, and so on—who interacts with whom, and how strongly.

Map to qubits. Encode the relevant orbitals, spins, and positions onto qubits so that each local interaction can be enacted by a small gate pattern.

Replay time. Run many tiny, local updates in the right order so the quantum computer’s state closely tracks how the electrolyte would evolve over femtoseconds or nanoseconds.

Ask focused questions. At chosen moments, query things like “what’s the chance the ion crossed the barrier?”, “how often does a cluster form?”, or “what is the conductivity signature?”.

Adjust and rerun. Tweak temperature, concentration, or additive chemistry and replay. There is no lab rebuild, and the simulation error is something you can tune rather than an uncontrolled approximation.

Why this beats classical in spirit: You’re not forcing a classical model to approximate quantum many-body behavior; you are using a quantum device to natively carry it. The simulator stays faithful as complexity grows, where classical cost can skyrocket.

Five opportunity patterns powered by Hamiltonian simulation

(For each: the principle → the nature of the opportunity → a simple “how it works.” No equations.)

1) Strongly correlated electrons (when approximations crack)

Principle used: Let the quantum computer natively evolve systems where electrons strongly influence one another across a material or molecule.

Nature of the opportunity: Predict properties of catalysts, superconductors, or tricky transition-metal complexes without uncontrolled shortcuts.

How it works (simple):

Identify the active electrons and orbitals that matter.

Encode their interactions as local rules on qubits.

“Play” the evolution long enough to extract energies, phases, and response signals.

2) Real-time reaction dynamics (watching processes as they happen)

Principle used: Simulate time-dependent rules to track bond breaking/forming, charge transfer, or energy flow.

Nature of the opportunity: See which pathway actually dominates in a reaction and how to nudge it (temperature, field, catalyst tweak).

How it works (simple):

Set an initial state reflecting reactants.

Evolve under the rulebook that includes driving pulses or fields.

Measure product probabilities and timing—rerun with slight modifications to steer outcomes.

3) Spectroscopy and response (reading the “fingerprint” directly)

Principle used: Drive the simulated system and sample its response to extract spectra and transport properties.

Nature of the opportunity: Predict what an experiment would measure (optical, vibrational, magnetic response) before building it.

How it works (simple):

Apply small “kicks” (theoretical probes) during simulation.

Record how the system’s observables respond over time.

Convert that response into the spectrum—peaks reveal structure and defects.
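The kick, record, transform recipe can be sketched classically with an autocorrelation signal, a simplified stand-in for a full linear-response calculation. The energies and weights below are hypothetical; the point is that peaks in the Fourier transform of the recorded time signal sit at the system’s energies.

```python
import cmath

# Hypothetical toy system: after a small "kick", the state is a superposition of
# eigenstates with energies E_k and weights w_k (values assumed for illustration).
energies = [0.5, 1.3, 2.1]
weights = [0.5, 0.3, 0.2]


def signal(t):
    """Autocorrelation <psi(0)|psi(t)> = sum_k w_k * exp(-i * E_k * t)."""
    return sum(w * cmath.exp(-1j * e * t) for w, e in zip(weights, energies))


# "Record how the system responds over time": sample on a uniform time grid.
dt, n_samples = 0.1, 2000
samples = [signal(k * dt) for k in range(n_samples)]


def spectrum(omega):
    """Normalized magnitude of the discrete Fourier transform at angular frequency omega."""
    total = sum(s * cmath.exp(1j * omega * k * dt) for k, s in enumerate(samples))
    return abs(total) / n_samples


# "Convert the response into a spectrum": scan frequencies and keep tall local maxima.
grid = [0.01 * i for i in range(1, 300)]
values = [spectrum(w) for w in grid]
threshold = 0.15  # above the sidelobes of the finite time window, below the smallest peak
peaks = [grid[i] for i in range(1, len(grid) - 1)
         if values[i] > values[i - 1] and values[i] > values[i + 1] and values[i] > threshold]
print([round(p, 2) for p in peaks])
```

With a 200-unit time window the frequency resolution is roughly 2π/200 ≈ 0.03, so longer recordings give sharper peaks; the threshold is a tuning choice for this toy signal, not a general rule.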

4) Finite-temperature and disorder (realistic operating conditions)

Principle used: Prepare thermal-like states and include random imperfections, then evolve.

Nature of the opportunity: Understand phase stability, defect tolerance, or performance in messy, real environments.

How it works (simple):

Randomize or bias the starting state to mimic temperature and impurities.

Evolve under the same local rules.

Average targeted measurements across many such runs to get reliable macroscopic numbers.

5) Field theories and emergent phenomena (beyond simple particles)

Principle used: Encode lattice versions of complex theories (gauge fields, spin liquids) and evolve them directly.

Nature of the opportunity: Explore regimes of physics that are notoriously hard for classical methods but define limits of materials and devices.

How it works (simple):

Lay down a grid where each site/link has a small local rule set.

Evolve to watch emergent behavior (confinement, topological order).

Read global signatures that diagnose phases and transitions.

The nature of the opportunity (pulled together)

Native fit: The problem is “how does a quantum system change?” A quantum computer is purpose-built to answer exactly that question.

Scale with grace: As systems get bigger and more entangled, classical cost can explode; the quantum simulator keeps using local rules and avoids that particular wall.

Interrogate at will: Perturb the model, rerun to any moment, revisit earlier ideas, and ask targeted questions, all in software, before you spend in the lab.

From models to mechanisms: You move from fitting curves to understanding mechanisms, which makes optimization and control far more reliable.

Ultra-simple mental model

Imagine a high-fidelity flight simulator, but for electrons and atoms. Instead of building the plane each time, you load the physics, fly a thousand missions under different weather and pilot inputs, and read exactly the gauges you care about. Hamiltonian simulation is that—a flight simulator for quantum matter.

Principle 7 — Block-Encoding and Quantum Linear Algebra (do “matrix math” on entire spaces at once)

Definition (what it is)

Block-encoding is a way to hide a big matrix inside a quantum operation so that the matrix, scaled down to fit, becomes a “block” of a larger unitary. Once a matrix is block-encoded, a quantum computer can apply many useful functions of that matrix directly to a quantum state: polynomials of it exactly, and functions such as its inverse, its exponential, or its sign to a controllable accuracy. A companion toolkit called quantum singular value transformation lets you filter, amplify, or bend the spectrum of that matrix in a controlled way. In plain terms: it is a general method for doing linear algebra (matrix powers, filtering, solving, preconditioning) as native quantum operations.
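For a real symmetric matrix A scaled so that its eigenvalues lie strictly inside [-1, 1], one textbook block-encoding is the unitary U = [[A, S], [S, -A]] with S = sqrt(I - A²). The sketch below builds it classically for a hypothetical 2×2 matrix, purely to make the “matrix as a block” idea concrete; on hardware, block-encodings are typically assembled from sparse-access oracles or linear combinations of unitaries rather than by computing S classically.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def sqrt_psd_2x2(m):
    """Square root of a 2x2 positive semidefinite matrix.

    Closed form from Cayley-Hamilton: sqrt(M) = (M + s*I) / t with
    s = sqrt(det M) and t = sqrt(trace M + 2*s).
    """
    s = math.sqrt(m[0][0] * m[1][1] - m[0][1] * m[1][0])
    t = math.sqrt(m[0][0] + m[1][1] + 2 * s)
    return [[(m[i][j] + (s if i == j else 0.0)) / t for j in range(2)] for i in range(2)]


def block_encode(a):
    """Return U = [[A, S], [S, -A]] with S = sqrt(I - A^2), for real symmetric A with ||A|| < 1.

    S is a polynomial in A, so it commutes with A; hence U @ U = I and U is unitary.
    """
    a2 = matmul(a, a)
    m = [[(1.0 if i == j else 0.0) - a2[i][j] for j in range(2)] for i in range(2)]
    s = sqrt_psd_2x2(m)
    top = [a[i] + s[i] for i in range(2)]
    bottom = [s[i] + [-x for x in a[i]] for i in range(2)]
    return top + bottom


A = [[0.5, 0.3], [0.3, -0.2]]  # hypothetical matrix, already scaled so ||A|| < 1
U = block_encode(A)

# Feed |0>_ancilla (x) |psi>: the first two output amplitudes (ancilla = 0) equal A|psi>.
psi = [0.6, 0.8]
out = [sum(U[i][j] * x for j, x in enumerate(psi + [0.0, 0.0])) for i in range(4)]
print("ancilla-0 block:", [round(x, 6) for x in out[:2]])
print("probability of ancilla = 0:", round(sum(x * x for x in out[:2]), 6))
```

Measuring the ancilla and finding 0 leaves the register proportional to A|psi>; that happens only with the printed probability, which is why practical pipelines track it and often boost it with amplitude amplification.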

Business gist (why this matters)

A huge amount of analytics, modeling, and optimization is linear algebra: solve a system, find dominant directions, filter noise, compress data, propagate dynamics, price risks, fit models. Classically, these jobs can become the bottleneck as data grows or as the math gets ill-conditioned. Block-encoding turns these linear-algebra chores into short quantum programs that act on all coordinates at once, often with much better scaling in problem size or accuracy, provided the matrix can be accessed efficiently (for example, it is sparse or well structured), the input can be loaded as a quantum state quickly, and the matrix is not too ill-conditioned. Practically, that means:

Turning “nightly batch” linear solves into interactive steps for the same precision target.

Extracting global structure (principal components, spectral gaps) from massive matrices without scanning every row or column.

Composing powerful pipelines: filter a spectrum, then invert what remains, then measure just the business quantity you care about—without ever materializing the full result vector.

Scientific explanation (plain but precise)

A matrix becomes a knob inside a unitary. You build a slightly larger quantum operation whose top-left corner equals your matrix (scaled to fit). That is block-encoding: the matrix is now callable as part of a clean, reversible operation.

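Once encoded, functions become programmable. Using quantum singular value transformation, you can apply polynomial functions to the singular values of that matrix and, through polynomial approximation, close approximations of many smooth functions; the cost grows with the degree of the polynomial needed. Think “take an inverse on the useful part of the spectrum,” or “squash large values, boost small ones,” or “zero out the junk.”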

Work in the spectrum, not in the coordinates. Classical algorithms push numbers around coordinate by coordinate. Block-encoding lets you surgically manipulate the spectrum directly, which is where most linear-algebra difficulty actually lives.

One pass touches every direction. A quantum state represents a whole vector at once. When you apply a block-encoded function, you transform every coordinate simultaneously. No loops over rows or columns.

You read only what matters. Instead of dumping the full transformed vector, you measure a small number of overlaps or averages that map to business metrics: a risk number, a regression coefficient, an error norm, a recommendation score.
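The “functions become programmable” step has a simple special case, often called qubitization: alternating a block-encoding U of a Hermitian matrix (one with U² = I, such as the textbook form U = [[A, S], [S, -A]]) with a reflection R about the ancilla |0> makes the top-left block of (RU)^k equal to the Chebyshev polynomial T_k(A). Full quantum singular value transformation inserts tunable phases between these steps to reach other polynomials. The sketch checks the identity classically for a 1×1 “matrix” a in [-1, 1]; for a Hermitian matrix it holds eigenvalue by eigenvalue.

```python
import math


def walk_operator(a):
    """W = R @ U, where U = [[a, s], [s, -a]] (s = sqrt(1 - a^2)) block-encodes the
    scalar a, and R = diag(1, -1) reflects about the ancilla |0> state."""
    s = math.sqrt(1 - a * a)
    return [[a, s], [-s, a]]


def matmul2(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def top_left_of_power(a, k):
    """Top-left entry (the encoded block) of W**k."""
    w = walk_operator(a)
    p = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(k):
        p = matmul2(w, p)
    return p[0][0]


def chebyshev(k, x):
    """T_k(x) from the recurrence T_{k+1} = 2x*T_k - T_{k-1}, with T_0 = 1 and T_1 = x."""
    if k == 0:
        return 1.0
    t_prev, t_cur = 1.0, x
    for _ in range(k - 1):
        t_prev, t_cur = t_cur, 2 * x * t_cur - t_prev
    return t_cur


for a in (0.9, 0.3, -0.6):
    from_walk = [round(top_left_of_power(a, k), 6) for k in range(5)]
    from_formula = [round(chebyshev(k, a), 6) for k in range(5)]
    print(a, from_walk, from_formula)
```

Repeating the walk k times costs k calls to the block-encoding and yields a degree-k polynomial of the matrix, which is the concrete sense in which the price of a matrix function grows with the polynomial degree it needs.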

One deep, concrete example (in everyday language)

Problem: You have a giant linear system. It arises from pricing a portfolio under many correlated factors, or from fitting a regularized regression on a very wide dataset. Classically, the solve dominates your runtime and memory.

Block-encoding mindset:

Wrap the matrix into a gate. Build a short quantum routine that, when called, acts as if it had multiplied by your matrix, but does so reversibly inside a larger operation; an extra “flag” qubit tells you when the multiplication took effect.

Choose the spectral surgery. You want the effect of “apply the inverse,” but only on the reliable part of the spectrum to avoid blowing up noise. With quantum singular value transformation you program exactly that: invert where it is safe, gently damp where it is not.

Apply it to the whole vector at once. Load the right-hand side vector as a quantum state. Run the spectral surgery routine. Now your state encodes the solution, up to normalization, as amplitudes.

Read the number you care about. Instead of printing the whole solution, you measure a small statistic: a particular coefficient, a confidence measure, or a portfolio risk number. If you need another statistic, you repeat the short readout, not the whole solve.
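The “invert where safe, damp where not” step can be emulated classically by applying a filtered inverse to each eigenvalue. This sketch assumes a hypothetical 2×2 symmetric system given directly in its eigenbasis and uses the Tikhonov-style filter f(λ) = λ/(λ² + δ²) as one common choice; a quantum singular value transformation circuit would implement a polynomial approximation of such a filter rather than diagonalizing anything.

```python
import math


def rotation(phi):
    c, s = math.cos(phi), math.sin(phi)
    return [[c, -s], [s, c]]


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(a, v):
    return [sum(a[i][k] * v[k] for k in range(len(v))) for i in range(len(a))]


def filtered_inverse(lam, delta):
    """About 1/lam when |lam| >> delta, about 0 when |lam| << delta (Tikhonov-style choice)."""
    return lam / (lam * lam + delta * delta)


def spectral_apply(eigvals, vecs, f, b):
    """Compute V diag(f(lambda)) V^T b, i.e. apply f to the spectrum of A = V diag(lambda) V^T."""
    coeffs = matvec(transpose(vecs), b)                   # rotate b into the eigenbasis
    scaled = [f(lam) * c for lam, c in zip(eigvals, coeffs)]
    return matvec(vecs, scaled)                           # rotate back


# Hypothetical ill-conditioned system: one trustworthy eigenvalue, one tiny noise-dominated one.
V = rotation(0.4)
eigvals = [2.0, 1e-4]
A = matmul(matmul(V, [[eigvals[0], 0.0], [0.0, eigvals[1]]]), transpose(V))
b = [1.0, 0.5]

x_naive = spectral_apply(eigvals, V, lambda lam: 1 / lam, b)
x_safe = spectral_apply(eigvals, V, lambda lam: filtered_inverse(lam, 1e-2), b)

# "Read the number you care about": a single weighted sum, not the whole vector.
c = [0.3, 0.7]
print("naive readout:   ", round(sum(ci * xi for ci, xi in zip(c, x_naive)), 3))
print("filtered readout:", round(sum(ci * xi for ci, xi in zip(c, x_safe)), 3))
```

The naive inverse multiplies the noise-dominated direction by 10⁴, so its readout is driven almost entirely by that one direction; the filtered version keeps the trustworthy part of the solve and damps the rest.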

Why this beats classical in spirit:

You never iterate over rows or columns. You operate in the spectrum, the heart of the difficulty, using a fixed template whose length grows with the condition number and the target precision. You also avoid materializing large outputs. For many tasks, the business answer is a scalar or a few scalars, not the full vector; quantum lets you go straight to those after a single spectral operation.

Five opportunity patterns powered by block-encoding and quantum linear algebra

(Each: the principle used → nature of the opportunity → simple “how it works.” No formulas.)

1) Fast linear solves for modeling and calibration

Principle used: Block-encode the system matrix; apply a controlled version of its inverse on the stable part of the spectrum.

Nature of the opportunity: Pricing, risk, least-squares, and regularized regression often reduce to a large linear solve; this is the wall in many pipelines.

How it works (simple):

Build a callable gate for the matrix using sparse access or factor oracles.

Program a spectral routine that behaves like “invert if trustworthy, damp if not.”

Apply once to a state encoding the right-hand side; read the business metric directly.

2) Principal components and low-rank structure extraction

Principle used: Use singular value transformation as a spectral filter to keep only the largest components and suppress the rest.

Nature of the opportunity: Dimensionality reduction, noise removal, and feature extraction on very large, tall-and-wide datasets where classical SVD strains memory and time.

How it works (simple):

Block-encode the data covariance or a related matrix.

Program a filter that passes only the top singular values.

Measure overlaps to recover the few directions that explain most of the variance.
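A classical analogue of “pass only the top singular values” is a high-degree polynomial filter: applying C^k to a vector multiplies each eigen-component by λ^k, so after normalization only the dominant direction survives. The sketch below is ordinary power iteration on a hypothetical covariance matrix; a quantum singular value transformation filter would use a better-shaped, step-like polynomial, but the idea of shaping the spectrum with a polynomial is the same.

```python
import math


def matvec(a, v):
    return [sum(a[i][k] * v[k] for k in range(len(v))) for i in range(len(a))]


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def polynomial_filter(cov, v, degree):
    """Apply cov**degree to v, renormalizing after each multiplication.

    In the eigenbasis each component is multiplied by lambda**degree, so only the
    direction with the largest eigenvalue survives: a crude "keep the top" filter.
    """
    for _ in range(degree):
        v = normalize(matvec(cov, v))
    return v


# Hypothetical 3x3 covariance matrix with one dominant direction.
cov = [[4.0, 1.0, 0.0],
       [1.0, 3.0, 0.0],
       [0.0, 0.0, 1.0]]
top = polynomial_filter(cov, [1.0, 1.0, 1.0], degree=60)
explained = sum(x * y for x, y in zip(top, matvec(cov, top)))  # Rayleigh quotient
print("dominant direction:", [round(x, 4) for x in top])
print("variance along it:", round(explained, 4))
```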

3) Graph and network analytics at scale

Principle used: Block-encode the graph Laplacian or adjacency and shape its spectrum to expose clusters, bottlenecks, or central nodes.

Nature of the opportunity: Community detection, influence scoring, and reliability analysis on massive interaction graphs without full eigen-decompositions.

How it works (simple):

Wrap the graph operator as a gate.

Apply spectral sharpeners that accentuate gaps and smooth noise.

Query a handful of statistics that reveal communities or weak links.

4) Stable filtering and preconditioning

Principle used: Implement a custom spectral preconditioner as a small quantum routine, improving conditioning before any “solve-like” step.

Nature of the opportunity: Many hard problems are hard because the matrix is ill-conditioned; good preconditioning turns a failing solve into a fast one.

How it works (simple):

Encode a preconditioner as its own block-encoded operation.

Compose preconditioner and main operator as a short sequence.

Proceed with the spectral routine; fewer rounds, better accuracy.
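Why conditioning matters can be seen on a 2×2 example. The sketch applies symmetric Jacobi (diagonal) scaling, one standard classical preconditioner chosen here only for illustration, to a hypothetical badly scaled matrix and compares condition numbers. The condition number is the quantity that sets how long a spectral inversion routine has to be.

```python
import math


def sym2_eigenvalues(m):
    """Eigenvalues of a real symmetric 2x2 matrix [[p, q], [q, r]]."""
    p, q, r = m[0][0], m[0][1], m[1][1]
    mean = (p + r) / 2
    radius = math.hypot((p - r) / 2, q)
    return mean - radius, mean + radius


def condition_number(m):
    """Ratio of largest to smallest eigenvalue magnitude."""
    lo, hi = (abs(x) for x in sym2_eigenvalues(m))
    return max(lo, hi) / min(lo, hi)


def jacobi_precondition(m):
    """Symmetric Jacobi scaling: D^{-1/2} A D^{-1/2}, with D the diagonal of A."""
    d = [1 / math.sqrt(m[i][i]) for i in range(2)]
    return [[d[i] * m[i][j] * d[j] for j in range(2)] for i in range(2)]


A = [[100.0, 1.0], [1.0, 1.0]]  # hypothetical badly scaled, symmetric positive definite matrix
P = jacobi_precondition(A)
print("condition number before:", round(condition_number(A), 2))
print("condition number after: ", round(condition_number(P), 2))
```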

5) Time-propagation, diffusion, and control through matrix functions

Principle used: Real-world evolution rules can be written as functions of a matrix (for example an exponential). Block-encoding plus singular value transformation applies that function directly.

Nature of the opportunity: Simulate diffusion in networks, propagate uncertainties, or apply smoothing and deblurring kernels without step-by-step integration.

How it works (simple):

Block-encode the generator of your process.

Program the desired function (for example a smoothing kernel) as a spectral mask.

Apply once; measure targeted summaries rather than full states.
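The diffusion case can be sketched classically: for a graph Laplacian L, the heat kernel exp(-tL) is a single matrix function that replaces step-by-step integration of dv/dt = -Lv. This example assumes a hypothetical 6-node path graph, evaluates the function with a truncated Taylor series, and cross-checks it against many small forward-Euler steps. Because exp(-tL) is not unitary, a quantum version would rely on block-encoding plus a polynomial approximation rather than plain Hamiltonian simulation.

```python
import math


def laplacian_path(n):
    """Graph Laplacian L = D - A of a path graph 0 - 1 - ... - (n-1)."""
    lap = [[0.0] * n for _ in range(n)]
    for i in range(n - 1):
        lap[i][i] += 1
        lap[i + 1][i + 1] += 1
        lap[i][i + 1] -= 1
        lap[i + 1][i] -= 1
    return lap


def matvec(a, v):
    return [sum(a[i][k] * v[k] for k in range(len(v))) for i in range(len(a))]


def heat_kernel_apply(lap, v, t, order=80):
    """exp(-t L) v via a truncated Taylor series: one matrix function, applied once."""
    term, out = list(v), list(v)
    for k in range(1, order):
        term = [(-t / k) * x for x in matvec(lap, term)]
        out = [a + b for a, b in zip(out, term)]
    return out


n, t = 6, 2.0
lap = laplacian_path(n)
start = [1.0] + [0.0] * (n - 1)  # all the "heat" starts on node 0
spread = heat_kernel_apply(lap, start, t)

# Cross-check: many small explicit (forward Euler) steps of dv/dt = -L v.
steps = 2000
v = list(start)
for _ in range(steps):
    v = [a - (t / steps) * b for a, b in zip(v, matvec(lap, v))]

print("after diffusion:", [round(x, 4) for x in spread])
print("total mass:", round(sum(spread), 10))
print("max |matrix function - Euler|:", max(abs(a - b) for a, b in zip(spread, v)))
```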

The nature of the opportunity (pulled together)

Act where the difficulty lives: in the spectrum, not in the coordinates.

Touch everything at once: a single routine transforms the entire space, not one row at a time.

Compose powerful pipelines: invert here, filter there, then read only what matters—no need to materialize giant outputs.

Scale with accuracy in mind: in many spectral routines, tightening the accuracy of the spectral step adds only mildly (often logarithmically) to the cost, though the final readout still needs more repetitions as error bars shrink.

Ultra-simple mental model

Imagine your matrix as a huge mixing board with thousands of sliders. Classically, you move sliders one by one to shape the sound. With block-encoding, you snap the whole board into a programmable box. Now you can say: “boost only the strong notes, mute the hiss, slightly invert the mids,” and the box does it to every channel at once. When it is done, you do not export all tracks—you press a button that tells you the one loudness number you actually needed for your decision.

Principle 8 — Quantum Walks (ballistic exploration of networks with built-in “don’t-waste-time” dynamics)

Definition (what it is)

A quantum walk is the quantum version of a random walk on a graph or network. Instead of wandering by bumping around randomly (like heat diffusing), a quantum walk propagates like a wave: it spreads coherently, carries directional memory in its phase, and uses interference so that unhelpful paths cancel while promising directions reinforce. The result is a style of exploration that is often ballistic rather than diffusive—you cover ground faster and target regions more effectively.
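The ballistic-versus-diffusive contrast is easy to see in the standard Hadamard walk on a line, simulated classically below. The symmetric starting coin and the step counts are illustrative choices; the quantum walk’s spread should grow roughly in proportion to the number of steps, while an unbiased classical random walk spreads only as the square root.

```python
import math


def hadamard_walk(steps):
    """Discrete-time quantum walk on a line with a Hadamard coin.

    Amplitudes are stored as {(position, coin): amplitude}; coin 0 moves left, coin 1 right.
    Returns {position: probability} after `steps` coin-then-shift steps.
    """
    r = 1 / math.sqrt(2)
    amp = {(0, 0): r, (0, 1): 1j * r}  # symmetric start: coin (|0> + i|1>)/sqrt(2)
    for _ in range(steps):
        new = {}
        for (x, c), a in amp.items():
            # Hadamard coin: |0> -> (|0> + |1>)/sqrt2, |1> -> (|0> - |1>)/sqrt2; then shift.
            for c2, h in ((0, r), (1, r if c == 0 else -r)):
                x2 = x - 1 if c2 == 0 else x + 1
                new[(x2, c2)] = new.get((x2, c2), 0) + h * a
        amp = new
    probs = {}
    for (x, _), a in amp.items():
        probs[x] = probs.get(x, 0.0) + abs(a) ** 2
    return probs


def std_dev(probs):
    mean = sum(x * p for x, p in probs.items())
    return math.sqrt(sum((x - mean) ** 2 * p for x, p in probs.items()))


for T in (50, 100, 200):
    quantum = std_dev(hadamard_walk(T))
    classical = math.sqrt(T)  # an unbiased +/-1 random walk has standard deviation sqrt(T)
    print(f"T={T:>3}: quantum spread {quantum:6.1f}, classical spread {classical:5.1f}")
```

Doubling the number of steps roughly doubles the quantum spread but multiplies the classical spread by only about 1.41, which is the “ballistic rather than diffusive” behavior described above.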

Business gist (why this matters)

Many hard problems look like “move around a huge network and find something rare” or “scan a giant state space for the good regions.” Classical random walks are slow and forgetful; they meander, revisit nodes, and waste steps. Quantum walks bias exploration without needing a global map:

Faster time to signal: Detect marked targets in roughly the square root of the classical random walk’s hitting time for broad families of graphs, and spread across many graphs faster than random walks do.

Better global coverage: Because motion is wave-like (not Brownian), you revisit less and progress more, which is crucial when states or nodes are expensive to probe.

General template: Many search, sampling, ranking, and matching routines can be reframed as “walk until you see the pattern”—and a quantum walk gives that template a provable speedup in the black-box sense for many cases.

Scientific explanation (plain but precise)